In-House vs Outsourced Moderation: What’s Right for Your Brand?

Managing content moderation without breaking the bank or stretching your team too thin is one of the biggest challenges social media managers face. Every comment, message, and post needs attention, but at what cost?

Deciding between building an in-house moderation team and outsourcing it boils down to a fundamental assessment of your available resources and budget. Both approaches have their place, and finding the right fit can make the difference between a thriving online community and a brand reputation crisis waiting to happen.

That’s why in this blog post, we'll examine when each approach makes sense, explore hybrid models that combine the best of both worlds, and show you how the right moderation strategy protects your brand while keeping costs under control.

What is Content Moderation On Social Media?

Content moderation is the practice of monitoring and managing content on online platforms, such as social media and networking websites, so that offensive, obnoxious, or age-inappropriate material doesn't reach your audience.

For social media platforms, content moderation is the process of reviewing and managing user-generated content to ensure it aligns with their community guidelines, legal requirements, and brand values, creating a safer and more respectful online environment.

In practice, this means users are free to post what they like (views, experiences, feedback, etc.), as long as it doesn't break community guidelines. Social media platforms want to give their users flexibility, but they also want to protect the community from things like hate speech or bullying so that it stays a safe environment for everyone.

When content violates their community rules, platforms have many options. They might leave it up if it serves the public interest but limit how many people see it. Or they might remove it entirely if it’s harmful to the community. And users who repeatedly break their guidelines can face suspension or permanent bans.

Platforms enforce these rules using a combination of technology and human reviewers. Instagram, for example, uses AI systems along with real people to find and review content that breaks their community standards.

AI handles most of the initial screening process. It can automatically detect and remove rule-breaking content before anyone even reports it. When the AI isn’t certain about something, it flags the content for human reviewers to make the final decision.
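The screening flow described above can be sketched in a few lines. This is only an illustration: the `triage` function, the `Decision` type, and both threshold values are hypothetical, and the violation score is assumed to come from whatever classifier a platform uses. Real platforms don't publish their thresholds.

```python
from dataclasses import dataclass

# Hypothetical cut-offs chosen for illustration only.
REMOVE_THRESHOLD = 0.95   # confident enough to remove automatically
REVIEW_THRESHOLD = 0.60   # uncertain: send to a human reviewer


@dataclass
class Decision:
    action: str   # "remove", "human_review", or "allow"
    score: float  # the classifier's confidence that the content breaks the rules


def triage(violation_score: float) -> Decision:
    """Route a piece of content based on the classifier's confidence."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= REMOVE_THRESHOLD:
        return Decision("remove", violation_score)
    if violation_score >= REVIEW_THRESHOLD:
        return Decision("human_review", violation_score)
    return Decision("allow", violation_score)
```

Lowering the review threshold sends more borderline content to people, which improves accuracy at the cost of reviewer time.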

What Are The Benefits Of Content Moderation On Social Media?

Taking content moderation seriously brings real, measurable benefits to brands and businesses. Here are some of them:

Protect Your Brand Reputation

Content moderation protects a brand's reputation by carefully monitoring what appears on its channels, so that nothing there damages how people see the brand.

For instance, when a brand shares offensive or controversial content, or fails to remove it from its channels, it can seriously damage how consumers view the brand and shift public opinion for the worse.

Improve User Experience

When platforms do content moderation well, the overall quality of what users see improves, which naturally makes the experience more enjoyable.

For example, moderation helps create a sense of safety and trust. When people feel like a platform is watching out for them, they're more likely to feel comfortable using it. That feeling of safety goes a long way in building a positive experience.

Reduce Security Threats

Content moderation reduces security threats by filtering out malicious content like phishing messages and deceptive posts.

Attackers often use direct messages and public posts to carry out social engineering attacks that trick users into revealing sensitive information. They then use this information to launch more damaging attacks, like account takeovers or financial data theft.

What's worse is that phishing attacks sometimes escalate into whaling, a targeted form of phishing aimed at high-value individuals in an organization.

Whaling relies on detailed personal or professional information to impersonate executives or trusted personnel with access to sensitive information. In these attacks, attackers use personally identifiable information (PII) to craft targeted messages that manipulate victims into transferring funds or sharing confidential data.

Some of the most common whaling targets include C-suite executives, but attackers also go after others in key positions. For example, Human Resources personnel are targeted for access to employee records. And finance staff are attractive because they process payments and approve transactions.

Content moderation detects and blocks these threats early. It scans for known phishing patterns, removes harmful links, and reports fake accounts, which reduces the exposure to these social engineering attempts.
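As a rough illustration of the link-scanning step, here is a minimal sketch that pulls URLs out of a message and checks their hostnames against a blocklist. The domains in `BLOCKED_DOMAINS` and the `find_blocked_links` helper are made up for this example. A real service would likely rely on maintained threat feeds and far richer signals than a static list.

```python
import re
from urllib.parse import urlparse

# Hypothetical blocklist; the ".example" domains are placeholders.
BLOCKED_DOMAINS = {"login-verify.example", "free-prizes.example"}

# Simple URL matcher; good enough for a sketch, not a full URL grammar.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)


def find_blocked_links(message: str) -> list[str]:
    """Return the URLs in `message` whose host is a blocked domain or a subdomain of one."""
    flagged = []
    for raw_url in URL_PATTERN.findall(message):
        url = raw_url.rstrip(".,!?)")  # drop trailing sentence punctuation
        host = (urlparse(url).hostname or "").lower().rstrip(".")
        if any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS):
            flagged.append(url)
    return flagged
```

A comment or direct message that returns a non-empty list could be hidden and queued for review rather than deleted outright.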

When Should You Do Content Moderation In-House?

Businesses should do content moderation in-house when they need a high level of control over the entire moderation process and over how their brand is presented online.

Having a team under the same roof makes it easier to quickly adjust moderation guidelines to reflect new trends, campaign goals, or audience behavior across social media platforms.

Managing social media moderation in-house also gives teams a clearer view of how users interact with their brand in real-time. That helps spot potential issues, like offensive comments, misinformation, or spam, before they become big problems.

An internal team ensures more consistent enforcement of moderation policies across all social channels. Lastly, in-house team members can be trained on the brand's tone, values, and messaging, so they're better equipped to handle tricky or controversial topics over the long run.

When Should You Outsource Your Content Moderation?

Businesses should outsource their content moderation when they need to save time, reduce costs, and quickly scale their moderation efforts.

Content moderation services often come with experienced teams that are already trained to handle large volumes of content efficiently.

Using content moderation services gives businesses immediate access to specialists who stay up to date on the latest online threats and moderation best practices, which helps strengthen overall platform security and user safety.

Outsourcing also improves speed. A dedicated external team can handle moderation around the clock, which reduces response times and keeps harmful or inappropriate content off your platforms more effectively.

For companies with fast growth or high volumes of user-generated content, this route is often more cost-effective than building and managing an in-house team.

When Should You Follow A Hybrid Model?

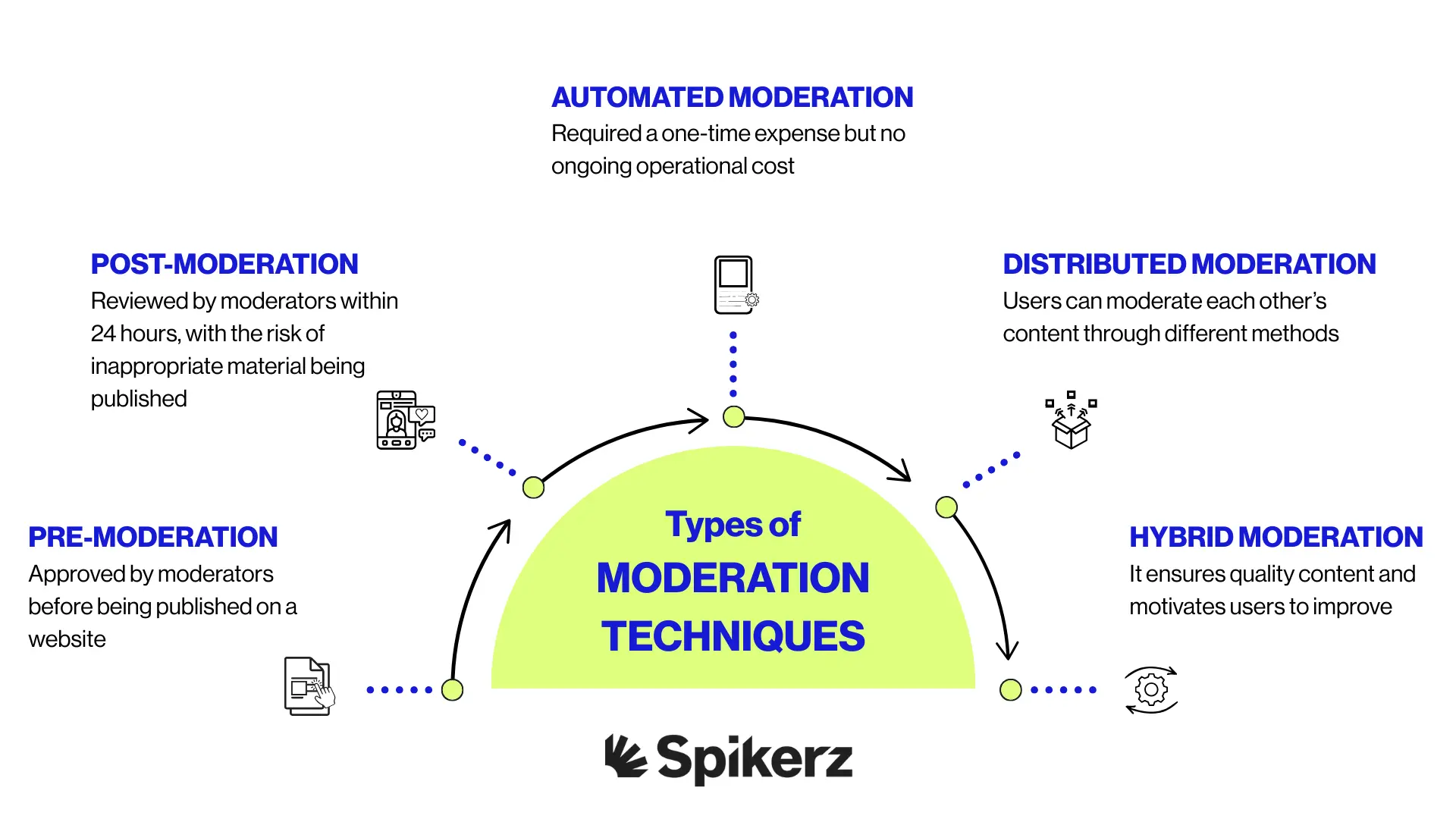

Hybrid moderation is a mix of different methods. For example, automated tools might check content first, and then human moderators review what's flagged. This way, the moderation is fast, but it still keeps things accurate.

A hybrid approach can also mean leveraging the strengths of both in-house and outsourced moderation. This could mean:

- In-house teams handle complex, sensitive, or high-priority content that requires deep brand understanding.

- Outsourced teams manage the bulk of routine content review, offering scalability and 24/7 coverage.

Ultimately, the best choice depends on your business's specific needs, size, goals, and resources.

What Content Moderation Services Exist?

Content moderation services cover many different areas, each designed to address specific aspects of online activity. For example:

1) Social Media Monitoring

Social media monitoring covers a wide range of services, from tracking direct mentions to detecting security threats. Here are the key types and how they support your brand:

Brand Engagement Tracking

Apart from tags and mentions, monitoring services also scan indirect references, like comments or posts that mention your product or campaign without tagging your profile. This helps you engage in relevant conversations, even if you're not directly invited, and it ensures no important feedback or opportunity goes unnoticed.

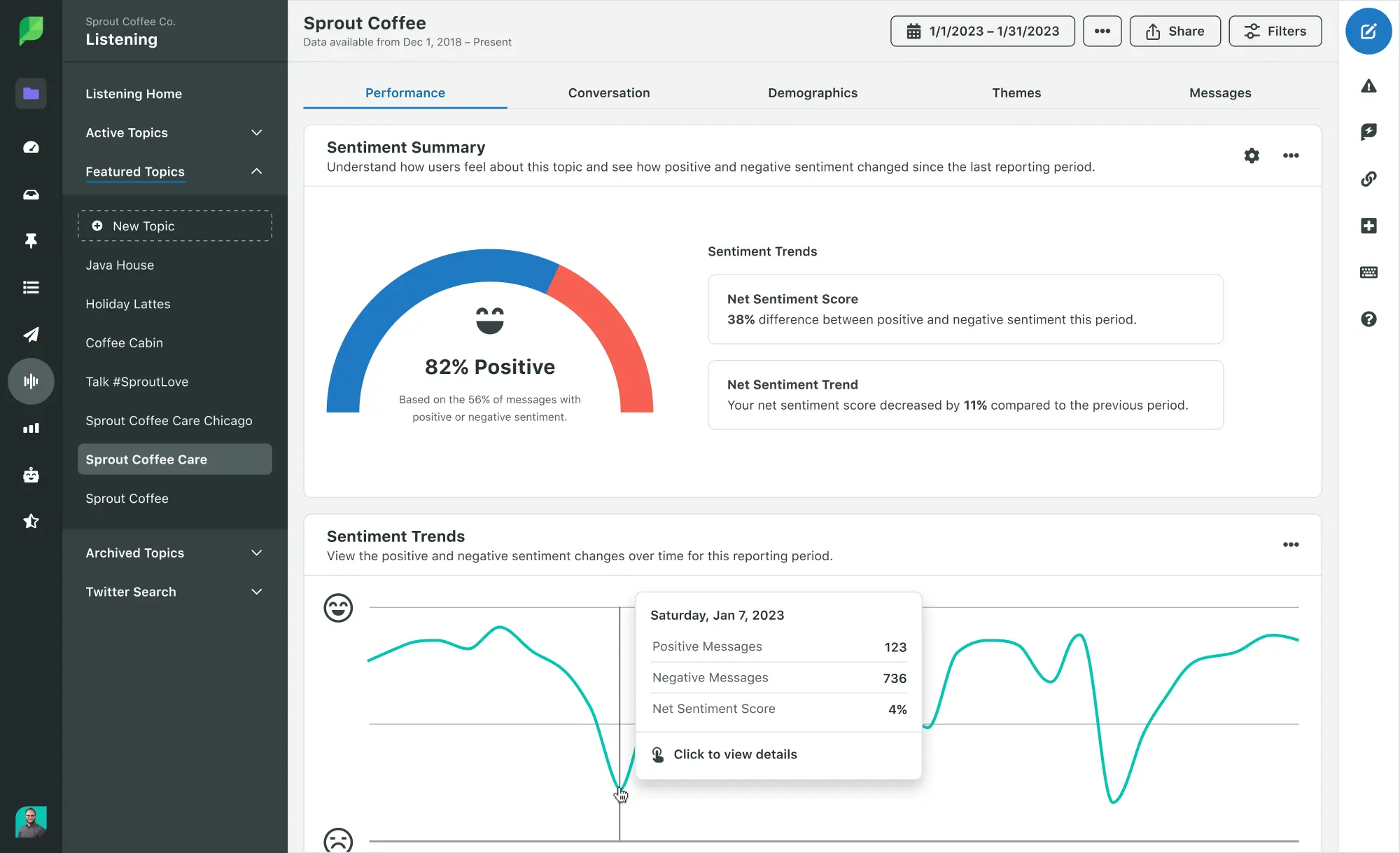

Audience Sentiment Analysis

Audience Sentiment Analysis is all about understanding the tone behind brand mentions. These services analyze user tone, language, and engagement patterns to understand public sentiment. So instead of relying only on surveys, emails, or community feedback, you get real-time sentiment data that helps you stay alert to shifts in public opinion and act before any small issues turn into bigger ones.

Campaign Performance Insights

Campaign Performance Insights zoom in on specific campaigns. They gather qualitative feedback, such as customer reactions, comments, and shared content, that reflects how users perceive your campaign beyond likes or shares. All of this adds depth to your reporting, helping your team understand what's working and what needs to be adjusted.

Crisis Detection And Response

As you know, not every post is positive. Monitoring services flag spikes in negative sentiment and identify viral posts that could harm your brand's reputation. Getting quick alerts allows your team to respond fast, manage public opinion, and address issues before they get out of control.
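One simple way to think about "a spike in negative sentiment" is to compare the latest hour against a recent baseline. The sketch below assumes you already have hourly counts of negative mentions from some sentiment tool. The `is_negative_spike` function, the z-score approach, and the default threshold are illustrative choices, not how any particular monitoring service works.

```python
from statistics import mean, stdev


def is_negative_spike(history: list[int], latest: int, z_threshold: float = 3.0) -> bool:
    """Return True when `latest` sits far above the recent baseline.

    `history` holds negative-mention counts for previous hours.
    """
    if len(history) < 2:
        return False  # not enough history to estimate a baseline
    baseline = mean(history)
    # Floor the spread at 1.0 so a perfectly flat history doesn't
    # turn a one-mention increase into an alert.
    spread = max(stdev(history), 1.0)
    return (latest - baseline) / spread >= z_threshold
```

For example, with a history of `[4, 6, 5, 7, 5, 6]`, a latest count of 40 would trigger an alert, while a count of 7 would not.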

Influencer Discovery

Monitoring tools can uncover opportunities too, like identifying influencers and potential brand advocates who already talk about your product or niche even if they've never tagged your profile. These types of tools are great for helping marketing teams find creators who align with your brand and start meaningful partnerships based on engagement.

Security Monitoring And Protection

Many services watch for suspicious activity like fake accounts, phishing attempts, and other social engineering tactics that try to trick employees or followers into revealing sensitive PII.

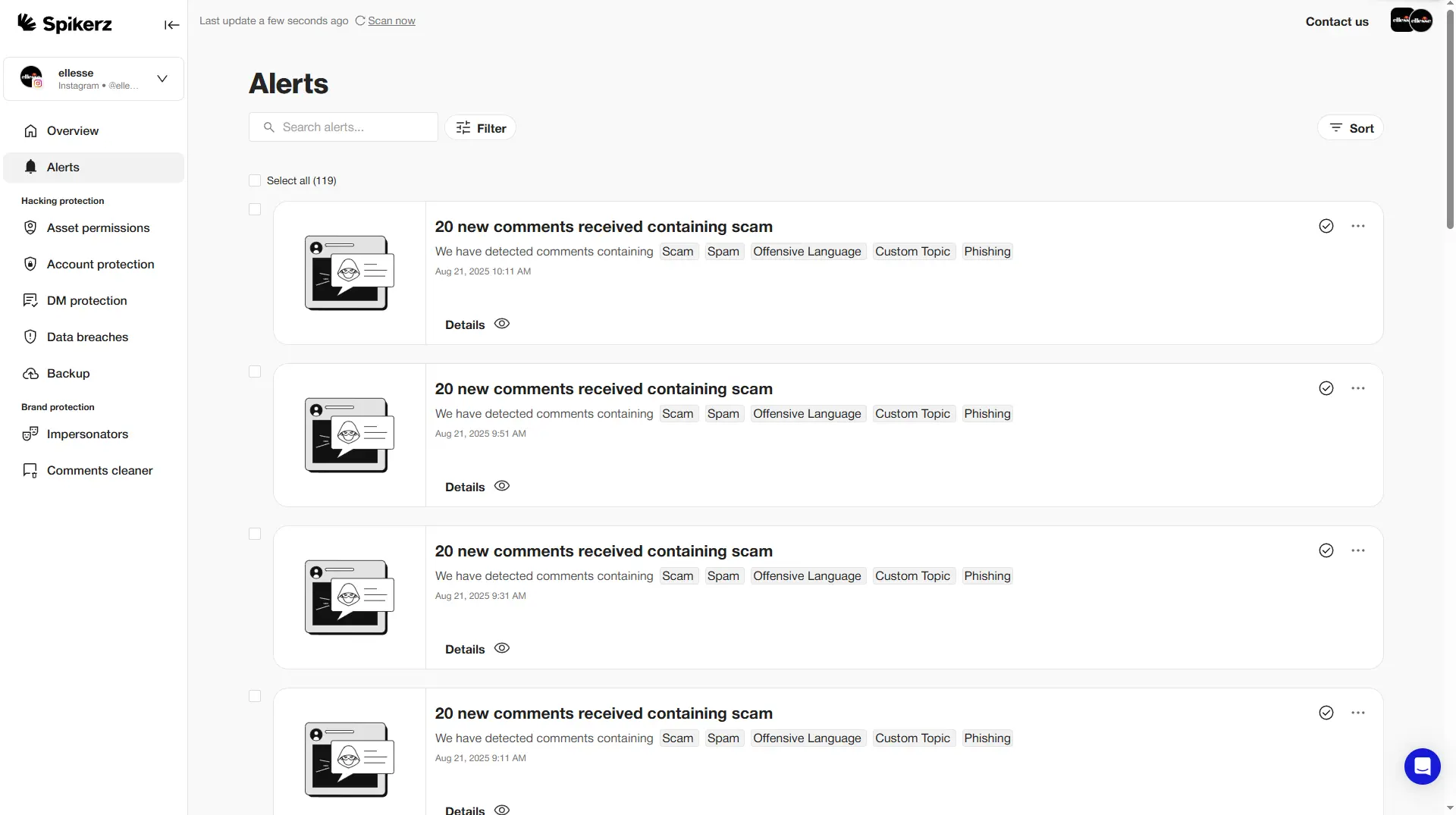

2) Comment Moderation

Another important area of content moderation is managing comment sections across posts, live streams, groups, and brand pages. These services help keep conversations clean, respectful and on-topic. It doesn't matter if they're happening in real-time during a live stream or in the comments of a post that's gaining traction.

Comment moderation tools detect and remove spam, hate speech, offensive language, and off-topic remarks before they damage your brand's reputation or make a mess of the community experience. They're especially useful for brands that get a lot of comments and want to grow healthy, engaged communities on social media platforms or forums.

3) Content Moderation

Content moderation focuses on the material your company publishes across social media channels and ensures every post aligns with your values, messaging, and platform guidelines. These services make sure your content is appropriate, consistent, and fully compliant with each platform's community standards to avoid bans, takedowns, or shadowbans.

Their main role is to maintain a healthy and cohesive online identity. They review text, images, videos, and links in posts to make sure everything reflects your brand's tone and ethics and to minimize the risk of content violations.

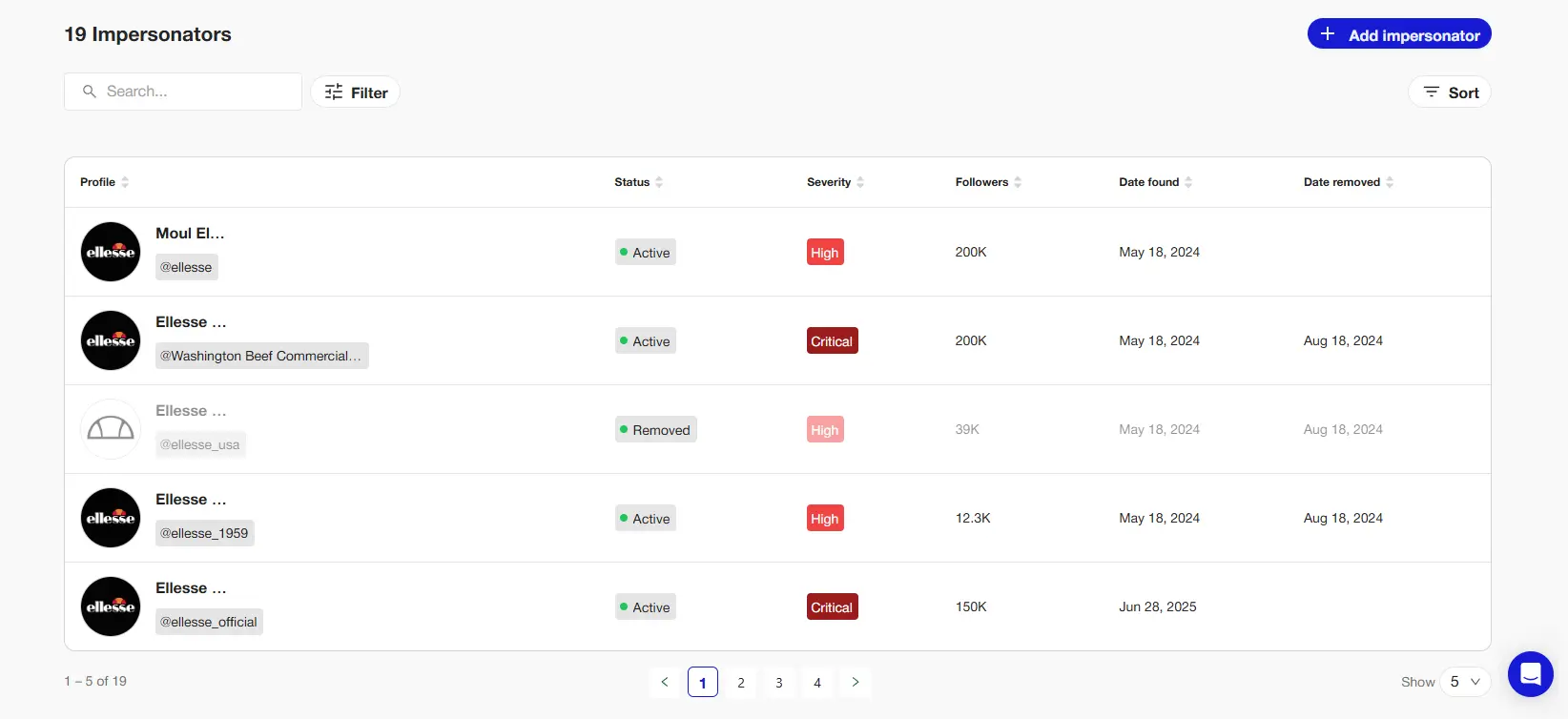

4) Brand Impersonation Monitoring

Protecting your brand from impersonation is just as important as moderating content and comments. Brand impersonation monitoring services identify and track fake profiles, pages, or accounts that impersonate legitimate businesses on social media.

They focus on identifying unauthorized use of brand assets, like logos, profile pictures, post content, usernames, social media links, and even spoofed websites.

They monitor platforms for any misuse of your brand's identity, whether it’s your visual style, tone of voice, or messaging. Impersonators often create lookalike accounts to trick users, collect personal or payment information, distribute malware, or run fake promotions and giveaways to harvest data.
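To make the idea of lookalike accounts concrete, here is a minimal sketch that compares a candidate handle against an official one using Python's standard `difflib`. The handles, the character-swap map, and the 0.85 threshold are all hypothetical. Real impersonation monitoring would also look at logos, bios, links, and posting behavior.

```python
from difflib import SequenceMatcher

# Illustrative, incomplete map of characters impersonators often swap in.
# Separators map to None so they are dropped entirely.
LOOKALIKES = str.maketrans(
    {"0": "o", "1": "l", "3": "e", "5": "s", "_": None, ".": None, "-": None}
)


def normalize(handle: str) -> str:
    """Lowercase the handle, drop a leading @, and undo common character swaps."""
    return handle.lower().lstrip("@").translate(LOOKALIKES)


def looks_like(official: str, candidate: str, threshold: float = 0.85) -> bool:
    """Return True when `candidate` closely resembles `official` without being it."""
    if candidate.lower().lstrip("@") == official.lower().lstrip("@"):
        return False  # this is the official account itself
    similarity = SequenceMatcher(None, normalize(official), normalize(candidate)).ratio()
    return similarity >= threshold
```

With these assumptions, `looks_like("acme_shoes", "acme_sh0es")` flags the handle, while an unrelated name such as `gardenlover22` does not.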

These services understand that brand impersonation takes advantage of consumer trust. When users see a familiar logo or brand name, they're more likely to engage, which makes them vulnerable to scams.

Once impersonation is detected, these services report the offending accounts to the platforms for removal, which helps protect your brand from reputational damage and prevents users from being misled into thinking they're interacting with an official channel.

How Spikerz Can Help You Moderate Your Content

Spikerz helps you moderate your content across every key area of your social media presence, whether you want to manage it in-house, have us handle it for you, or use a hybrid approach that fits your workflow.

We support comment moderation, helping you keep your pages, posts, and groups clean and respectful. It doesn't matter if it's spam, offensive language, or off-topic content. Our team or yours, using our tools, can filter it out in real time to protect your community and brand image.

For content moderation, Spikerz makes sure everything your brand posts aligns with platform guidelines and your own internal values. It helps you avoid takedowns, shadowbans, or mixed messaging that can hurt your credibility.

We also offer robust brand impersonation monitoring. Our tools scan for fake accounts, stolen brand assets, and suspicious activity. When we spot impersonators, we take immediate action to report and remove them. That way we protect your brand and your audience from fraud.

In short, Spikerz gives you the flexibility to choose the moderation model that works best for your business, whether that's handling it yourself, outsourcing it completely, or striking a balance.

Conclusion

Choosing between in-house, outsourced, or hybrid moderation depends on your specific needs, resources, and growth trajectory. What matters most is that you have a system in place. Without proper moderation, your brand remains vulnerable to attacks that can destroy customer trust and tank your reputation overnight.

Remember, the cost of poor moderation is much higher than the investment in proper protection. Whether you build an internal team, partner with external specialists, or use tools like Spikerz to create a customized approach, taking action now saves you from becoming tomorrow's cautionary tale. Your brand's future depends on the moderation decisions you make today.
