What Is Disinformation in Cybersecurity? A Beginner's Guide

GenAI and social media have handed malicious actors unprecedented power to flood the internet with convincing lies. What once took large teams weeks to produce now takes a few minutes: deepfakes, fabricated news articles, and synthetic social media posts that look real.

As a result, organizations worldwide are scrambling to counter these campaigns with dedicated technologies and defensive strategies. The days of hoping your business won't be targeted are over. Every company needs to understand how disinformation works, who's behind it, and what it can do to protect its brand.

In this guide, we break down the mechanics of modern disinformation campaigns, examine real-world examples that shook global markets, and reveal defensive strategies that actually work.

What Are Disinformation Campaigns?

Disinformation campaigns are deliberate actions to spread false information and mislead people. In practice, that means using lies as tools to manipulate public opinion, damage reputations, or achieve political and financial goals.

But don’t confuse disinformation with misinformation. Misinformation is false information too, but it’s shared without the intention of deceiving people. Although the intent is different, they have similar results: confusion, mistrust, and real-world consequences for both businesses and individuals.

Disinformation campaigns are nothing new, but the speed and reach of social media platforms have made them far more prominent. They also spread across websites, forums, streaming platforms, and cable channels, and often circulate widely before anyone questions them.

How Do Disinformation Campaigns Work?

Disinformation campaigns work by spreading false content, such as videos, memes, or doctored photos, on social media platforms to reach wider audiences. Automated accounts (bots) can also spread it to create an illusion of widespread support or opposition and so influence public opinion.

Other channels include word of mouth, speeches, group text messages, news and opinion programming or streams, and blog posts; nearly any form of material can be used. The complexity ranges from simple text posts to elaborate multimedia content that can trick even careful viewers.

The most recent tactic is deepfake technology, which makes disinformation even easier to create.

For example, bad actors can use it to produce videos of political figures appearing to make inflammatory statements they never made. A deepfake might surface right before an election, leaving little time for fact-checking or for the candidate to clarify their actual position.

The purpose of these campaigns depends on the goals of the bad actors. Some want to polarize audiences, stirring up anger or confusion so that people turn against one another or lose confidence in leaders.

Others are financially motivated: they use disinformation to manipulate stock prices, trick employees into handing over sensitive data, or open the door to cyberattacks.

To achieve these goals, attackers rely on tactics like:

- Using generative AI to produce misleading content at scale before organizations have a chance to respond

- Creating convincing phishing emails

- Exploiting vulnerabilities in workforce collaboration tools and call centers

- Using malware to steal credentials

- Initiating account takeovers

How Does Disinformation Spread?

Disinformation spreads so quickly because it relies on people themselves to push it forward. Bots and fake accounts also play a role, but the bigger issue is that regular users often share false content without realizing it.

Each share exposes the content to a wider network, and its reach grows with every step, creating a ripple effect that lets false content travel further and faster than the truth. A widely cited 2018 MIT study of Twitter, for example, found that false news stories reached 1,500 people about six times faster than true ones.

What makes it especially powerful is access to large networks. For example, if a public figure, influencer, or organization with a big following shares it, it immediately reaches thousands or even millions of people. That amplification gives disinformation momentum that is hard to stop.

In short, the combination of human sharing and the power of large networks is the reason disinformation moves fast and penetrates deeply online.
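To make the ripple effect concrete, here is a rough back-of-the-envelope sketch in Python. It is a toy model, not a measurement: the function name `expected_reach` and all of the numbers are our own illustrative assumptions, and it assumes every sharer has the same number of followers and that audiences never overlap.

```python
def expected_reach(seed_sharers, followers_per_user, share_rate, rounds):
    """Toy estimate of cumulative views after each round of sharing.

    Assumptions (illustrative only): every sharer has the same number of
    followers, audiences never overlap, and a fixed fraction of viewers
    reshare the content in the next round.
    """
    cumulative = []
    sharers = float(seed_sharers)
    total_views = 0.0
    for _ in range(rounds):
        viewers = sharers * followers_per_user  # people who see this round's shares
        total_views += viewers
        cumulative.append(round(total_views))
        sharers = viewers * share_rate          # viewers who go on to reshare
    return cumulative


# Ten ordinary accounts, 200 followers each, 1% of viewers reshare.
print(expected_reach(10, 200, 0.01, 3))   # [2000, 6000, 14000]

# A single account with 500,000 followers beats that in one round.
print(expected_reach(1, 500_000, 0.01, 1))  # [500000]
```

Even in this simplified model, one large account outperforms several rounds of ordinary sharing, which is why amplification by big networks matters so much.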

Examples of Disinformation Campaigns

Disinformation campaigns aren't theoretical threats. They’re happening right now, targeting governments, businesses, and individuals worldwide.

Here are two major operations that show the scale and sophistication of modern disinformation warfare:

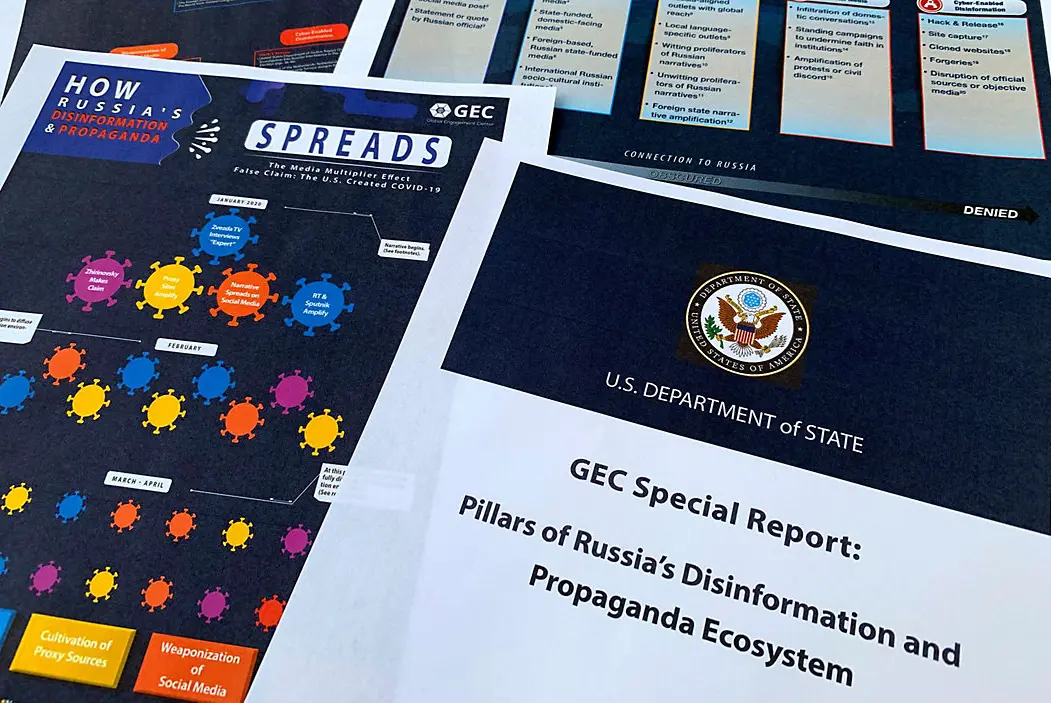

1) "Doppelgänger" – Russian Disinformation Campaign

The Doppelgänger campaign is a disinformation operation run by two Russian companies, Social Design Agency and Structura National Technologies. Active since at least May 2022, it creates fake versions of trusted media outlets, government sites, and think tanks to spread pro-Russian narratives disguised as legitimate news.

Its goal is to undermine trust in Western institutions, weaken support for Ukraine, and reinforce Kremlin propaganda. It does this by portraying Ukraine as corrupt, denying events like the Bucha massacre, and warning Europeans that sanctions on Russia will harm their lives.

The campaign works by using generative AI to produce fake articles, buying domains that mimic real outlets, and adding links to credible sites for authenticity. Social media bots, fake accounts, and paid ads then amplify this content across platforms like Facebook and X, blurring the line between the truth and disinformation, and reaching wide audiences quickly.
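Because campaigns like this depend on domains that mimic real outlets, one simple defensive check is to compare newly seen domains against a list of domains you trust. The Python sketch below is a minimal illustration under our own assumptions: the domain names are made up, the helper names (`edit_distance`, `find_lookalikes`) are hypothetical, and it only handles bare domains with no subdomains.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance, computed one row at a time."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def find_lookalikes(candidates, trusted, max_distance=2):
    """Return (candidate, trusted_domain, reason) for suspicious candidates.

    Assumes bare domains such as "example-news.com" (no subdomains).
    """
    hits = []
    for cand in candidates:
        c_label, _, c_suffix = cand.lower().partition(".")
        for real in trusted:
            r_label, _, r_suffix = real.lower().partition(".")
            if (c_label, c_suffix) == (r_label, r_suffix):
                continue  # exact match: this is the real domain
            if c_label == r_label:
                hits.append((cand, real, "tld-swap"))
            elif edit_distance(c_label, r_label) <= max_distance:
                hits.append((cand, real, "typo"))
    return hits


# Hypothetical domains for illustration only.
seen = ["examp1e-news.com", "example-news.ltd", "example-news.com", "unrelated.org"]
print(find_lookalikes(seen, ["example-news.com"]))
```

A check like this catches only the crudest imitations; it says nothing about the content a site publishes, so treat a hit as a prompt for manual review rather than proof.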

2) Chinese Disinformation Campaigns

Chinese disinformation campaigns are rooted in the Communist Party's "Three Warfares" strategy, which combines public-opinion, psychological, and legal warfare to shape perceptions and advance its interests.

Here’s how each of the three warfares works in practice:

- Public-opinion warfare uses media and propaganda to control narratives, strengthen unity at home, and weaken opponents’ will.

- Psychological warfare undermines an adversary's decision-making and morale, and fuels internal divisions to create instability.

- Legal warfare uses national and international law to delegitimize adversaries and claim legal superiority in disputes.

In practice, these campaigns are central to China's influence operations in the Asia-Pacific. They are used to discourage Taiwan from pursuing independence and to challenge the Philippines' maritime claims in the South China Sea.

The work is carried out by several state actors, including the PLA's Strategic Support Force for cyber operations, the Ministry of State Security for covert activity, the Central Propaganda Department for propaganda, and the Ministry of Public Security for internet control.

What Tools Do Attackers Use In Disinformation Campaigns?

Attackers use a mix of tools, some long-established and some cutting-edge, to make disinformation campaigns more convincing and harder to detect. Here’s a brief overview:

Bots And Automation

These are computer programs that act like real people online. They can post, like, share, and comment at massive scale (thousands at once), making it seem like a message has huge support when it's really just machines amplifying it.
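One telltale sign of this kind of amplification is many different accounts posting the same text within a very short time. The following Python sketch shows the idea; the input format, thresholds, and function names are our own assumptions, and real platforms rely on far richer signals than identical wording.

```python
from collections import defaultdict


def normalize(text):
    """Lowercase and collapse whitespace so trivial edits still match."""
    return " ".join(text.lower().split())


def find_coordinated_bursts(posts, min_accounts=3, window_seconds=60):
    """Flag texts posted by several distinct accounts in a short window.

    posts: iterable of (account, timestamp_in_seconds, text) tuples.
    Returns {normalized_text: sorted accounts in the first qualifying window}.
    """
    by_text = defaultdict(list)
    for account, ts, text in posts:
        by_text[normalize(text)].append((ts, account))

    flagged = {}
    for text, events in by_text.items():
        events.sort()
        start = 0
        for end in range(len(events)):
            # Shrink the window until it spans at most window_seconds.
            while events[end][0] - events[start][0] > window_seconds:
                start += 1
            accounts = {acct for _, acct in events[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged[text] = sorted(accounts)
                break
    return flagged


# Made-up sample data.
sample = [
    ("a1", 0, "Brand X is a SCAM"),
    ("a2", 10, "brand x is a scam"),
    ("a3", 25, "Brand X  is a scam"),
    ("u9", 5000, "brand x is a scam"),
    ("u1", 5, "Lovely weather today"),
]
print(find_coordinated_bursts(sample))
# {'brand x is a scam': ['a1', 'a2', 'a3']}
```

Genuine viral posts can trigger the same pattern, so a flag here is a reason to look closer, not a verdict.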

Generative AI

Generative AI tools create fake but realistic-looking content, including deepfakes, where someone's face or voice is convincingly altered, and AI-written text that sounds human. These fakes can trick people into thinking a politician, celebrity, or expert said or did something they never did.

Micro-targeting

Instead of blasting one message to everyone, attackers use data (like your online habits, interests, or location) to send tailored messages to people most likely to believe or share them.

Geofencing

Geofencing sets up a virtual boundary around a geographic area, like a neighborhood, city, or even a protest site, so that only people in that area see the disinformation. It makes the message feel more local and personal.

Proxy Websites

Proxy websites are fake news sites designed to look legitimate and mimic trusted media outlets. They publish false or misleading stories to give disinformation a "credible" tone.

How Can You Defend Against Disinformation Campaigns?

Your business doesn't have to be a sitting duck for disinformation attacks. There are three powerful defense strategies that can protect your organization from becoming the next victim of coordinated false information campaigns.

1) Technological Solutions

One way to defend against disinformation campaigns is through technology. Artificial intelligence and machine learning can spot patterns in false content, flag anomalies, and filter out misleading material before it spreads.
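As a very simple stand-in for that kind of anomaly flagging, the Python sketch below marks hours in which mentions of a brand jump far above the recent baseline. It is a plain statistical check rather than machine learning, and the function name, window size, threshold, and sample counts are all illustrative assumptions.

```python
from statistics import mean, pstdev


def flag_spikes(counts, window=6, threshold=3.0):
    """Return indexes where a count sits far above its trailing baseline.

    counts: mentions per hour, oldest first.
    A point is flagged when it is at least `threshold` standard deviations
    above the mean of the previous `window` points.
    """
    flagged = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        # Floor the deviation at 1.0 so a perfectly flat baseline
        # does not cause division by zero or flag tiny changes.
        sigma = max(pstdev(baseline), 1.0)
        if (counts[i] - mu) / sigma >= threshold:
            flagged.append(i)
    return flagged


# Made-up hourly mention counts with one sudden surge.
hourly_mentions = [10, 12, 11, 9, 10, 11, 12, 95, 10]
print(flag_spikes(hourly_mentions))  # [7]
```

A spike only tells you that something unusual is happening; a person or a more capable model still has to judge whether the surge is a disinformation push or genuine news.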

Alongside this, content authentication is becoming a key line of defense. Techniques like watermarking and adding provenance information help verify the authenticity of digital media, making it harder for manipulated or AI-generated content to pass as real.
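The core idea behind provenance checks can be sketched in a few lines of Python: the publisher attaches a cryptographic tag to each official file, and anyone holding the key can confirm the file has not been altered. This is a deliberately simplified illustration using a shared secret (HMAC) from the standard library; real provenance systems typically use public-key signatures and embedded metadata, and the function names and key below are our own placeholders.

```python
import hashlib
import hmac


def sign_media(data: bytes, key: bytes) -> str:
    """Create a tag binding the media bytes to the publisher's secret key."""
    digest = hashlib.sha256(data).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_media(data: bytes, tag: str, key: bytes) -> bool:
    """Check that the media bytes still match the published tag."""
    expected = sign_media(data, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


# Placeholder key and content for illustration only.
key = b"demo-key-do-not-use"
original = b"official statement video bytes"
tag = sign_media(original, key)

print(verify_media(original, tag, key))                # True
print(verify_media(original + b" edited", tag, key))   # False
```

Any change to the file, however small, produces a different tag, which is what makes altered copies detectable.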

Tools that strengthen individual and organizational security are also important, since disinformation often spreads through compromised accounts or coordinated bot activity.

Spikerz is one solution that helps in this space. It protects social media accounts by detecting and blocking takeover attempts, bot-driven attacks, and phishing scams. Our platform monitors your accounts 24/7, catching suspicious activity before damage occurs.

It also defends against social engineering tactics like impersonation by monitoring for suspicious activity and shutting down fake accounts, removing them from social media so your audiences only interact with your real accounts.

If attackers can't hijack your official channels, they lose a critical vector for spreading disinformation.

2) Human Behavior And Media Literacy

Technology alone can't stop disinformation, which is why human behavior and media literacy play such an important role.

If you understand what disinformation is and how it works, you become more alert to misleading narratives. When you learn to recognize the signs of false information, practice critical thinking, and verify sources before you share something, you help limit its reach.

That’s why you should train your employees to spot deepfakes, verify unusual requests, and question suspicious content to create a human firewall against disinformation.

A community that is better informed, more skeptical, and careful with online engagement is harder to manipulate. Strengthening media literacy not only protects individuals but also builds resilience across society, making disinformation less effective overall.

3) Collaboration Between Sectors

Protecting against disinformation also requires strong collaboration across sectors. Governments, technology companies, and civil organizations each bring unique strengths, and working together allows them to share resources, intelligence, and best practices.

International cooperation adds another layer of defense: aligning efforts across borders makes it harder for disinformation campaigns to exploit gaps between countries. If malicious actors can't move operations to less-protected regions, their effectiveness drops significantly.

When these partnerships are in place, technology professionals play a central role in building tools and strategies that identify, disrupt, and reduce the impact of false narratives. This kind of coordinated approach makes the overall defense against disinformation far more effective.

Conclusion

Disinformation campaigns have grown from minor annoyances into powerful tools that threaten businesses, democracies, and public trust itself. But you're not defenseless. If you understand how these campaigns work, you’ll be able to spot attacks before they gain momentum.

The most successful defense combines all three approaches: technological shields, educated employees, and collaboration with other organizations facing similar threats.

In the battle against disinformation, isolation makes you vulnerable, but united defense makes you nearly impossible to defeat.