How To Protect Your Business From AI Impersonation

AI and machine learning tools have become standard weapons in cybercriminals' arsenals for launching disinformation campaigns. These technologies enable bad actors to create convincing fake content at unparalleled scale and speed. As a result, disinformation campaigns now rank among the top cybersecurity threats worldwide.

In this blog post, we'll explain what AI impersonation is and how these attacks work, examine real-world examples of disinformation campaigns, and share proven strategies to protect your business from these evolving threats.

What Is AI Impersonation?

AI impersonation happens when bad actors use artificial intelligence to create realistic-looking or realistic-sounding depictions of people. This technology manipulates images, videos, or audio to make someone appear to say or do things they never said or did.

The process involves machine learning algorithms that analyze existing media to generate synthetic content. On their own, these tools serve legitimate purposes in entertainment, education, and creative industries. The danger is that they are also widely used for malicious purposes like scams, fraud, and disinformation.

What’s worse is that the technology's accessibility and improving quality make it increasingly difficult for untrained people to distinguish between authentic and synthetic content.

How Does AI Impersonation Work?

AI impersonation technology relies on machine learning algorithms to create convincing representations of real people. The process typically follows four distinct steps that transform raw data into convincing deepfakes:

- Data collection phase: Scammers gather audio, video, or image samples of their target from social media profiles, public videos, interviews, or other online sources. The more data they collect, the more convincing their final product becomes.

- Algorithm training: Machine learning models analyze the collected data to learn the person's unique voice patterns, facial features, expressions, and mannerisms. Advanced algorithms can extract these characteristics from surprisingly small amounts of source material.

- Synthetic content generation: The trained models create fake voice recordings or deepfake videos that mimic the target person. Modern AI can generate this content in real-time, making live impersonation possible.

- Deployment and distribution: Attackers use this synthetic content to impersonate targets in phone calls, video conferences, social media posts, or other communications. The goal is to deceive victims into believing they're interacting with the authentic person.

What Is an Example of a Disinformation Campaign?

Generative AI existed before 2022, but it became widely accessible when ChatGPT launched and democratized these powerful tools. Since then, people have discovered countless applications for AI technology, including assisting in creating large-scale disinformation campaigns.

Malicious actors now use AI impersonations to manipulate public opinion at critical moments through coordinated social media influence operations and fake news websites. These campaigns exploit the trust people place in familiar voices and faces to spread false information.

Election interference is a particularly concerning application of disinformation campaigns. Bad actors launch these operations with the specific goal of influencing voting outcomes and undermining democratic processes. While these campaigns have gained traction worldwide, they've become particularly problematic in Europe where operations target multiple countries simultaneously.

For example, a Russian disinformation campaign used AI-generated fake news articles and deepfake videos to impersonate established European news outlets, aiming to sow distrust in EU institutions and influence public opinion before critical elections.

Why Is AI Impersonation So Dangerous?

AI impersonation presents major risks because the technology has become democratized and accessible to anyone with basic technical skills. Also, the quality of AI-generated content has improved dramatically, making it extremely difficult for untrained people to distinguish real content from fakes.

This combination of accessibility and quality is the perfect storm for malicious activities across multiple attack vectors. For example:

Account Takeovers

Account takeovers happen when attackers gain unauthorized access to user accounts on social media platforms like LinkedIn, X, Facebook, Instagram, and YouTube. Cybercriminals use many methods to compromise accounts, including social engineering, phishing campaigns, malware that records keystrokes, exploiting stolen or weak passwords, and leveraging security vulnerabilities in platform infrastructure.

These attacks are particularly dangerous because they harm both brands and individual users. When an organization's official accounts are compromised, it can suffer reputational damage, direct financial losses, and operational downtime. Users, in turn, typically lose money when attackers use hijacked accounts to spread scams through trusted channels.

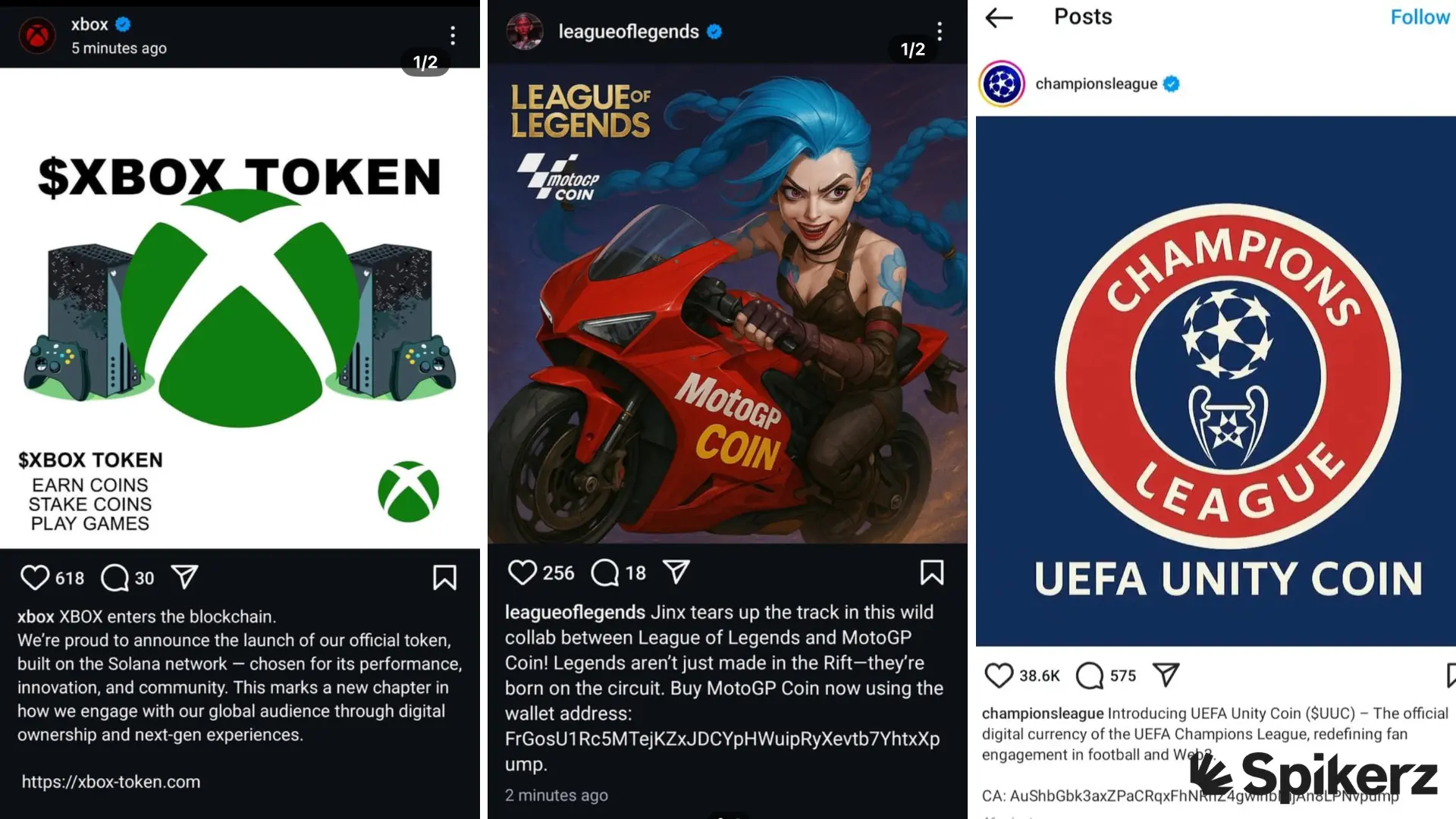

Here are some of the high-profile examples we’ve reported on in the past month alone:

- UEFA's Instagram account was compromised to promote a cryptocurrency meme coin scam, damaging the organization's reputation and defrauding followers.

- Riot Games' League of Legends Instagram fell victim to hackers who used the platform to promote cryptocurrency scams.

- Riot's VALORANT Instagram account was hijacked to spread similar cryptocurrency scams to their gaming audience.

- TRON DAO's X account was compromised and used to promote meme coin scams targeting cryptocurrency enthusiasts.

- The New York Post's X account was hacked to send direct messages inviting users to fake podcast appearances. When they agreed, they were given a physical address, potentially setting up victims for physical crimes. Thankfully, the scam ultimately failed.

- AJ Styles' X account was compromised through a SIM swap attack and used to promote a cryptocurrency scam.

Disinformation Campaigns At Scale

Disinformation campaigns typically target larger organizations and public figures instead of individual consumers. However, as your business grows and gains visibility, it may become an attractive target for these types of attacks.

These attacks are especially concerning because generative AI improves the quality and scale of disinformation campaigns. When attackers have sufficient data to train specialized models, they can create content that mimics your brand's voice, visual identity, and communication patterns across multiple platforms.

For example, the European election interference campaign we mentioned earlier shows how AI enables global-scale operations. Russian operatives created fake European news outlets using AI-generated articles and deepfake videos that looked real to casual observers, successfully reaching millions of users across multiple countries.

However, these attacks aren't limited to politics or specific geographic regions. We're seeing similar campaigns across industries worldwide, targeting businesses, organizations, and public figures. European campaigns often receive more media attention due to current geopolitical tensions, but the threat landscape spans all sectors and regions globally.

Creating Phishing Campaigns

As we’ve said many times, phishing is the most common attack vector in cybersecurity, and AI is transforming how these campaigns operate. Given that, you might expect AI to be writing most of today’s phishing emails and social media messages, but the data suggests otherwise.

According to SlashNext's Phishing Intelligence Report, phishing emails increased by 202% in the second half of 2024. However, Hoxhunt's analysis found that only 0.7% to 4.7% of phishing emails were written entirely by AI.

This likely means that AI isn't generating complete phishing campaigns but is assisting with specific components or improving existing content. Attackers use AI to improve language quality, personalize messages, and create more convincing impersonations rather than to automate entire operations.

For example, during the Sony Pictures breach, attackers posed as colleagues of senior executives, convincing them to open malicious attachments that led to the theft of over 100 terabytes of confidential data and losses exceeding $100 million.

That’s why organizations need comprehensive approaches that combine technology, training, and processes to stay ahead of these attacks. The goal is to reduce risk, increase transparency, and expand security capabilities across all potential attack vectors.

How Can You Protect Your Business From AI Impersonation?

The good news is that organizations worldwide are recognizing the serious threat posed by AI and disinformation campaigns. Many are already implementing dedicated strategies and technologies to combat these attacks and protect their digital assets.

Here are some of the proven methods you can use to defend your business against AI impersonation attacks, regardless of your organization's size or industry.

1) Create A Rapid Response Team (RRT)

A Rapid Response Team (RRT) is your organization's specialized defense unit against impersonation attacks and disinformation campaigns. This dedicated group ensures your business can respond immediately when threats emerge, minimizing potential damage to your reputation and operations.

Have you ever wondered how companies like Microsoft and Google are able to respond to attacks so quickly?

Major technology companies like these can respond to security incidents within minutes because they maintain well-trained RRTs. When attacks target their platforms or services, these teams coordinate response efforts, communicate with stakeholders, and implement countermeasures before damage escalates.

That said, RRTs aren't exclusive to large corporations with extensive resources. Any business with an active online presence should consider establishing some form of rapid response capability, even if it's a small team with clearly defined roles and procedures.

2) Use Social Media Security Tools

Social media platforms are primary targets for impersonators, who can easily create fake accounts, distribute misleading content, and target your followers with phishing messages. That’s why regular monitoring of these platforms is essential, but manual oversight becomes impractical once your presence grows across multiple channels.

Fortunately, there are specialized social media security tools that automate this process, continuously scanning for fake accounts, flagging suspicious activity, and alerting your team to potential threats.

These tools can also automate takedown requests and use AI and machine learning to identify and block malicious activity like spear-phishing attempts and AI-generated content.

For example, on top of doing everything we just mentioned, Spikerz strengthens your account security through centralized multi-factor authentication, access management, and continuous monitoring that prevents account takeovers before they happen.

Tools like Spikerz keep your social media protected and prevent unauthorized individuals from accessing personal details and misusing your brand assets.
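To make the idea of automated scanning more concrete, here is a minimal Python sketch of one small piece of it: flagging handles that closely resemble your official one. It is an illustration only and does not describe how Spikerz or any other product works. The `HOMOGLYPHS` table, the function names, the `0.85` threshold, and the `@acme_corp` handles are all assumptions made up for this example.

```python
from difflib import SequenceMatcher

# Assumed substitutions often seen in lookalike handles (illustrative, not exhaustive).
# Mapping a character to "" removes it, so separators are ignored when comparing.
HOMOGLYPHS = str.maketrans(
    {"0": "o", "1": "l", "3": "e", "5": "s", "_": "", ".": "", "-": ""}
)


def normalize(handle: str) -> str:
    """Lowercase a handle, drop a leading @, and undo common character swaps."""
    return handle.lower().lstrip("@").translate(HOMOGLYPHS)


def similarity(official: str, candidate: str) -> float:
    """Return a 0..1 similarity ratio between two normalized handles."""
    return SequenceMatcher(None, normalize(official), normalize(candidate)).ratio()


def flag_lookalikes(
    official: str, candidates: list[str], threshold: float = 0.85
) -> list[str]:
    """Return candidates that resemble the official handle without being it."""
    official_handle = official.lower().lstrip("@")
    return [
        c
        for c in candidates
        if c.lower().lstrip("@") != official_handle
        and similarity(official, c) >= threshold
    ]


# Hypothetical handles found while searching a platform for your brand name.
found = ["@acme_corp", "@acme_c0rp", "@acmecorp_support", "@gardening_tips"]
print(flag_lookalikes("@acme_corp", found))
```

With these inputs, only `@acme_c0rp` is flagged: it normalizes to the same string as the official handle. A longer variant such as `@acmecorp_support` falls below the assumed threshold, which shows why a real monitoring tool would need more signals than string similarity, such as profile images, bios, and posting behavior.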

3) Create An Impersonation File

An impersonation file is a record of fake accounts, fraudulent posts, screenshots, dates, and any communications targeting your business. This documentation creates a paper trail that provides concrete evidence for reporting violations to social platforms and pursuing legal action when necessary.

The main reason to create an impersonation file is that it enables rapid response and reduces the damage caused by online impersonation attacks such as website spoofing (fake websites), phishing emails, and fake social media profiles. It also protects your customers by helping you quickly identify and eliminate fake accounts that could deceive your audience and damage your reputation.

When you can demonstrate patterns of abuse to platform administrators, they’re more likely to take action against bad actors. Well-maintained impersonation files reveal attack patterns, establish the scope and frequency of threats, and help ensure your reports meet platform-specific guidelines for takedown requests.

Think of your impersonation file as both a legal defense tool and a reputational protection strategy that helps you respond effectively when attacks escalate beyond simple platform violations.
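An impersonation file can live in a spreadsheet, but as a rough sketch, here is one way it might be structured in Python so that entries stay consistent and easy to export. Every field name, account handle, and file path below is a hypothetical example, not a format required by any platform.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class ImpersonationRecord:
    """One entry in the impersonation file (all field names are illustrative)."""

    platform: str
    fake_account: str
    first_seen: date
    description: str
    screenshots: list[str] = field(default_factory=list)
    reported: bool = False


def to_json_lines(records: list[ImpersonationRecord]) -> str:
    """Serialize records oldest-first, one JSON object per line."""
    ordered = sorted(records, key=lambda r: r.first_seen)
    return "\n".join(
        json.dumps({**asdict(r), "first_seen": r.first_seen.isoformat()})
        for r in ordered
    )


def counts_by_platform(records: list[ImpersonationRecord]) -> dict[str, int]:
    """Count records per platform to show where attacks concentrate."""
    return dict(Counter(r.platform for r in records))


# Example entries; the accounts and file paths are made up.
records = [
    ImpersonationRecord(
        "Instagram", "@acme_c0rp", date(2025, 3, 4),
        "Fake giveaway post using our logo",
        screenshots=["evidence/ig-2025-03-04.png"],
    ),
    ImpersonationRecord(
        "X", "@acme_support_help", date(2025, 2, 20),
        "DMs asking customers for login codes",
    ),
    ImpersonationRecord(
        "Instagram", "@acmecorp.official", date(2025, 3, 9),
        "Profile cloned from our official page",
    ),
]

print(to_json_lines(records))
print(counts_by_platform(records))
```

Sorting by date gives you the timeline you would attach to a report, and the per-platform count is a simple way to surface the attack patterns mentioned above.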

4) Report Impersonators

Reporting impersonators is crucial for stopping the spread of false information and protecting your brand's credibility. When fake accounts operate unchecked, they can scam your customers, ruin trust in your company, and create legal complications that go far beyond simple reputation damage.

Also, the sooner you report them, the faster social media platforms can take them down. Platforms receive thousands of reports daily, but they prioritize recent violations and accounts that pose immediate risks to users.

But how do you find all the information you need for reporting accounts?

Platforms like Facebook, Instagram, X, and LinkedIn have specific processes for reporting impersonation that require detailed information about the fake accounts and sometimes evidence of trademark or identity violations.

Thankfully, there are tools like Spikerz that simplify this process by automatically scanning social media platforms for impersonators, collecting the necessary evidence, and helping you file takedown requests directly with the appropriate platforms. This automation ensures you don't miss emerging threats and reduces the administrative burden on your team.

5) Alert Your Audience

Transparency builds trust and protects your customers from falling victim to impersonation scams. When your business faces impersonation attacks, your audience needs clear information about the threats, especially if scammers are actively trying to contact them using your brand's identity.

Use your official communication channels (including social media accounts, company blog, website announcements, and email newsletters) to inform customers about ongoing impersonation attacks.

Quick communication helps your customers identify fake accounts before they become victims of scams that could result in financial losses or identity theft. It also benefits you: customers who know what to look for become additional eyes and ears, helping you identify and report new impersonation attempts.

Conclusion

AI impersonation is a growing threat that combines new technology with traditional social engineering tactics to target businesses across all industries.

The democratization of AI tools means that creating convincing impersonations no longer requires advanced technical skills or significant resources. Any motivated bad actor can now launch attacks against your business using readily available technology.

That’s precisely why your protection strategy must combine multiple approaches: establishing rapid response capabilities, implementing specialized monitoring tools, maintaining detailed documentation, quickly reporting violations, and communicating transparently with your audience.

Tools like Spikerz give you automated protection that works around the clock to detect threats, secure your accounts, and help you respond quickly when attacks happen. Take action before attackers strike to protect your brand's reputation and your customers' trust and financial security.