Don't Let Deepfakes Kill Your Business: All You Need To Know

Sooner or later, businesses will face deepfake attacks. We have reached a critical inflection point where AI is fundamentally transforming phishing and fraud. What once required advanced technical skills and expensive equipment now takes just a few clicks on an ordinary computer.

The statistics back this up. According to research from Deloitte, 25.9% of executives say their organizations have experienced one or more deepfake incidents. Even more alarming, a study cited in CFO Magazine reports that 92% of companies have experienced financial loss due to a deepfake.

In this blog post, we'll cover what deepfakes are, how the technology works, why they pose such serious threats to businesses, how to spot them, and most importantly, how to protect your organization from these attacks.

What Are Deepfakes?

The term "deepfake" is a blend of "deep learning" and "fake." This technology uses artificial intelligence to create convincing images, videos, or audio of real people. The AI learns to mimic someone's appearance, voice, and mannerisms by analyzing hours of existing content.

Creating convincing deepfake video used to be very difficult, but the technology has improved so much that it's increasingly hard to tell the difference. What once required specialized knowledge and powerful computers can now be done with readily available apps and software. Given this rapid progress, experts predict that within a few years deepfakes will be almost indistinguishable from real content.

That said, deepfakes aren't perfect yet, so there are still ways to identify them. We'll cover how in a moment, but first let's look at how the technology works.

How Deepfake Technology Works

To protect your organization from deepfakes, it helps to first understand how the technology works. Here's how the main components function:

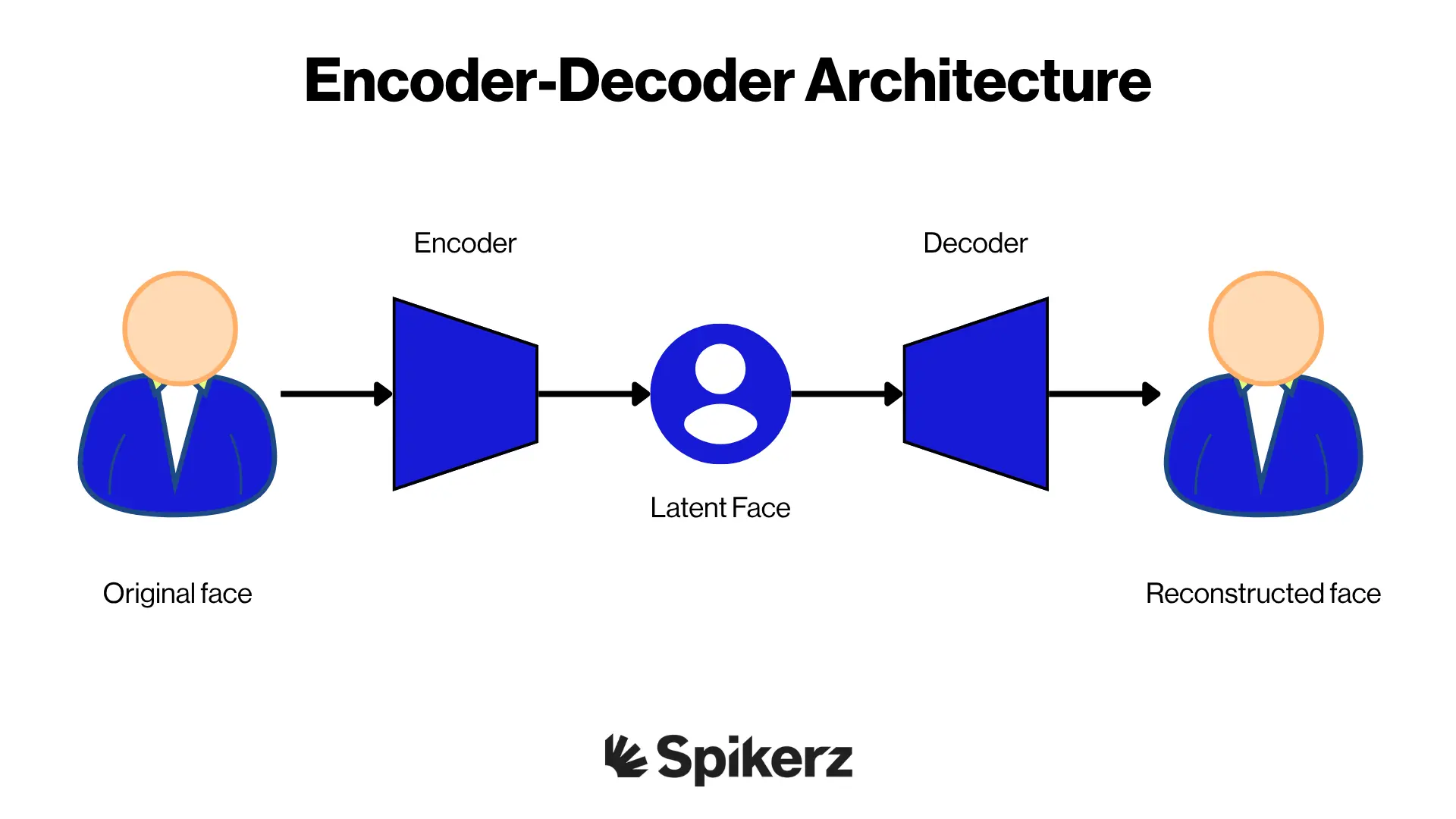

Encoder-Decoder Architecture

The encoder-decoder architecture is a framework used in machine learning to handle different types of prediction problems, particularly those involving sequence data. Think of it as an advanced translation system that converts one type of information into another.

In the context of deepfake technology, this architecture is used to manipulate or generate visual and audio content with a high degree of realism. The encoder compresses the input data into a smaller representation, while the decoder reconstructs it in the desired format. This process allows the system to learn patterns and features that can be applied to new content.
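
To make the compress-then-reconstruct idea concrete, here is a toy sketch in Python. It assumes a hypothetical, hand-picked linear basis in place of a trained network: real deepfake encoders and decoders are deep neural networks learned from large amounts of face footage, and `BASIS`, `encode`, and `decode` are illustrative names, not part of any deepfake tool.

```python
from typing import List

Vector = List[float]

# Hypothetical "learned" basis: two orthonormal directions in a 4-D input space.
# A trained deepfake model would learn millions of parameters instead.
BASIS: List[Vector] = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, -0.5, 0.5, -0.5],
]


def encode(x: Vector) -> Vector:
    """Compress a 4-D input into a 2-D latent code by projecting onto each basis vector."""
    return [sum(b_i * x_i for b_i, x_i in zip(b, x)) for b in BASIS]


def decode(z: Vector) -> Vector:
    """Reconstruct a 4-D output as a weighted sum of the basis vectors."""
    out = [0.0] * len(BASIS[0])
    for weight, b in zip(z, BASIS):
        for i, b_i in enumerate(b):
            out[i] += weight * b_i
    return out


if __name__ == "__main__":
    frame = [3.0, 1.0, 3.0, 1.0]   # fits the learned pattern
    code = encode(frame)
    print(code)                    # [4.0, 2.0]
    print(decode(code))            # [3.0, 1.0, 3.0, 1.0]

    odd = [1.0, 0.0, 0.0, 0.0]     # does not fit the pattern
    print(decode(encode(odd)))     # [0.5, 0.0, 0.5, 0.0] (detail lost)
```

The second input shows the trade-off: content that doesn't match the learned patterns loses detail on its way through the smaller latent code, which hints at why these models are trained on hours of footage of the target person.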

Generative Adversarial Networks (GANs)

GANs are technically not part of the encoder-decoder architecture, but they are often used together with it to improve the quality of deepfakes. This technology is one of the most significant breakthroughs in AI-generated content creation.

A GAN consists of two competing networks:

- A “generator” (akin to the decoder) that creates images.

- A “discriminator” that evaluates their authenticity.

These two networks essentially engage in a constant battle where each tries to outsmart the other. The generator strives to produce outputs that the discriminator can't distinguish from real images, thus improving the realism of the deepfakes. This process continues until the generated content becomes nearly indistinguishable from authentic material.
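
The adversarial "battle" is usually expressed as two loss functions. The Python sketch below uses the standard binary cross-entropy formulation with the widely used "non-saturating" generator loss. It takes the discriminator's output probabilities as plain numbers instead of training real networks, and the function names are illustrative rather than taken from any deepfake tool.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: probability the discriminator assigns to a real image being real.
    d_fake: probability it assigns to a generated image being real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when the discriminator is fooled (d_fake near 1)."""
    return -math.log(d_fake)


if __name__ == "__main__":
    # Early in training: the discriminator easily spots fakes.
    print(discriminator_loss(0.9, 0.1), generator_loss(0.1))
    # At equilibrium the discriminator can only guess (0.5 for everything).
    print(discriminator_loss(0.5, 0.5), generator_loss(0.5))
```

Early on the generator's loss is high (about 2.3), which pushes it to improve; once the discriminator can only guess, its loss settles at 2 ln 2 (about 1.386) and the generated content is, by this measure, indistinguishable from real data.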

Sequence to Sequence Learning

The encoder-decoder architecture is great for sequence-to-sequence learning tasks. This is particularly important when creating video deepfakes that need to maintain consistency across multiple frames.

In the case of deepfakes, this involves mapping a sequence of input frames to a sequence of output frames, ensuring temporal coherence and maintaining the fluidity of movements and expressions across frames. Without this technology, deepfake videos would appear choppy and unrealistic as facial expressions and movements wouldn't flow naturally from one frame to the next.
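
Temporal coherence can be measured as how much a tracked feature (say, the x-coordinate of a mouth corner) moves from one frame to the next. The toy Python sketch below uses hypothetical per-frame values and an exponential moving average as a simple stand-in: real sequence models learn coherence during training rather than smoothing their output afterwards, but the jitter measure shows the kind of frame-to-frame instability that coherent models avoid.

```python
from typing import List


def jitter(track: List[float]) -> float:
    """Mean absolute change between consecutive frames of one tracked value."""
    steps = [abs(b - a) for a, b in zip(track, track[1:])]
    return sum(steps) / len(steps)


def smooth(track: List[float], alpha: float = 0.5) -> List[float]:
    """Exponential moving average: blend each new frame with the previous smoothed frame.

    Smaller alpha means smoother motion but more lag behind real movement.
    """
    out = [track[0]]
    for value in track[1:]:
        out.append(alpha * value + (1 - alpha) * out[-1])
    return out


if __name__ == "__main__":
    # Hypothetical mouth-corner x-coordinates generated frame by frame (pixels).
    per_frame = [100.0, 104.0, 99.0, 105.0, 100.0]
    print(jitter(per_frame))          # 5.0
    print(smooth(per_frame))          # [100.0, 102.0, 100.5, 102.75, 101.375]
    print(jitter(smooth(per_frame)))  # 1.78125
```

High jitter in a tracked feature is exactly what makes frame-by-frame output look choppy, and the same measurement can help a reviewer notice when a face "shimmers" between frames.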

Why Are Deepfakes a Serious Threat?

The main issue with deepfakes is that they are becoming indistinguishable from real content. As a result, they are being used in disinformation campaigns to deceive, mislead, or confuse groups of people.

On a small scale, they are used in whaling attacks that trick employees into believing they are talking to a C-level executive so that they send payments or personally identifiable information (PII) to third parties. On a large scale, they're used to persuade large groups of people to believe a counter-narrative. For example, Russia is using disinformation campaigns to target EU countries, undermine democratic values, and influence elections.

The good news is that organizations are already taking steps to combat disinformation campaigns with dedicated techniques and technologies. In fact, according to Gartner, demand is growing so quickly that by 2028, 50% of enterprises will adopt products, services or features specifically to address disinformation security use cases, up from less than 5% in 2024.

How to Spot Deepfakes

Deepfakes are difficult to identify, but it's not impossible, and there are effective ways to spot them. In fact, Corridor Crew (a popular VFX studio) created a video showing how to identify them. Here are their findings:

Check The Upload Date

The first thing to do if you suspect a video may be a deepfake is check the upload date. If a video was uploaded before 2023, there is a very high likelihood that it is real and not AI generated.

Deepfake technology existed in research labs and limited applications before 2023, but the tools and quality needed to create convincing content only became widely accessible more recently. That makes an older upload date a strong sign that the video is legitimate.

Count The Seconds

AI-generated video is expensive to produce, so the companies behind these tools limit clips to a few seconds. Each AI-generated clip usually runs between 5 and 10 seconds, so pay attention to when transitions happen, meaning each change of scene or camera cut. If there are no cuts for 15 to 30 seconds, there's a high likelihood the video is real.
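
If you have the timestamps of each cut (noted by hand or exported from a scene-detection tool), the check is simple arithmetic. The Python sketch below is a hypothetical helper, not a detector: it finds the longest uninterrupted shot and applies a 15-second threshold taken from the rule of thumb above, which is a hint rather than proof.

```python
from typing import List


def longest_shot(cut_times: List[float], duration: float) -> float:
    """Length in seconds of the longest stretch between cuts (or video start/end)."""
    boundaries = [0.0] + sorted(cut_times) + [duration]
    return max(end - start for start, end in zip(boundaries, boundaries[1:]))


def long_take_present(cut_times: List[float], duration: float, threshold: float = 15.0) -> bool:
    """True if any single shot runs longer than `threshold` seconds.

    Under the rule of thumb, a long uninterrupted shot makes it more likely
    the footage is real; short clips prove nothing on their own.
    """
    return longest_shot(cut_times, duration) > threshold


if __name__ == "__main__":
    # Hypothetical 28-second clip that cuts every 6 to 8 seconds.
    print(longest_shot([6.0, 12.5, 20.0], 28.0))        # 8.0
    print(long_take_present([6.0, 12.5, 20.0], 28.0))   # False
    # Hypothetical 40-second clip with a single cut at 4 seconds.
    print(long_take_present([4.0], 40.0))               # True
```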

Check The Text

Take a look at the text in the background. Is it legible or is it gibberish? This simple check can immediately reveal whether you're looking at AI-generated content.

AI-generated videos often contain grammatical errors, misspelled words, and in some cases text that makes no sense at all. This is because the AI is generating something new without the context it needs to make it accurate, so it makes these kinds of mistakes all the time.

Take A Look At Their Teeth

Teeth are one major telltale sign of AI-generated video. The reason is that for AI to generate realistic-looking teeth, it needs to study millions of examples of people talking to get enough training data.

The problem is, when people talk, we are constantly showing and hiding our teeth so AI doesn't know when to show them and when to hide them. The timing and positioning of teeth during speech creates complex patterns that current AI systems struggle to replicate accurately.

Also, every person's mouth is different, and AI doesn't know what the inside of a particular person's mouth looks like. As a result, it generates generic teeth that don't match the context or the person.

The teeth tend to look unrealistic and inconsistent, which makes them easy to spot. In some frames they're blurry, in others there are no teeth at all, and at times it's a smile from hell. Either way, it's easy to identify.

Look For Continuity Errors

Continuity errors happen even in Hollywood movies, where things sometimes change from one shot to the next. For example, in the first truck chase in Terminator 2, when the truck jumps off the overpass and lands, you can clearly see the windshield pop off, and then the film immediately cuts to Robert Patrick behind cracked glass.

Hollywood missed this continuity error, and errors like it show up all the time in AI-generated video. Deepfake technology processes frames individually or in small groups, which makes it difficult to maintain consistency across longer sequences.

If you see something like this, it's an immediate red flag that tells you it's AI generated. These errors happen because the AI lacks the broader context that human editors use to maintain consistency throughout a video.

Identify Logic Problems

AI has improved so much that a lot of the time it's hard to tell the difference between real video and deepfake, but one thing it can't hide is logic problems. These issues stem from the way AI generates content without truly understanding the physical world.

For example, traffic in AI videos often makes no sense. A car may drive in one direction, only for the next car in the same lane to come from the opposite direction. You might also notice objects that appear and disappear between frames, or people whose clothing or accessories change inexplicably.

These kinds of logic problems pop up all the time, so when you see one, it's a strong sign that you're watching an AI video. The technology excels at creating realistic-looking individual elements but struggles to maintain logical relationships between objects and scenes.

Follow Your Intuition

The last thing you can do is follow your intuition. If something doesn't feel right, there's usually a reason. Our brains are remarkably good at detecting subtle inconsistencies that we might not be able to articulate consciously.

Combine your intuition with the tips above, and you'll be much better equipped to tell whether a video is AI generated or real. Trust that nagging feeling when something seems off about a video, even if you can't immediately identify what's wrong.

How To Protect Your Business From Deepfakes

There are a few effective ways to protect your business from deepfakes. Here’s how to create multiple layers of defense against synthetic media attacks:

1) Protect Collaboration Tools

One of the most important things to remember is that the goal of most impersonation attempts is to trick employees into revealing sensitive company information or making unauthorized payments. Deepfakes are increasingly being used to strengthen traditional social engineering attacks.

That's why we need to protect our communication tools from synthetic media like deepfakes as much as possible. This includes, but is not limited to, workforce collaboration tools (Slack and Microsoft Teams), call centers, mobile phones, email, and social media direct messages. Each of these channels is a potential entry point for deepfake-enhanced attacks.

2) Always Evaluate Third Party Content

Always evaluate content originating outside of your organization for authenticity and accuracy before taking action. This verification step is critical when dealing with video calls, voice messages, or any content that could potentially be synthetic.

Reach out to the "sender" through a different official channel to confirm that the request is legitimate and not a scam. For example, if you receive an email from your CEO asking for a payment, contact them through Slack or Teams to confirm. Better yet, call their personal phone number if you have it.
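
The rule behind this advice is that a confirmation only counts if it comes through a channel the attacker doesn't control: a different channel from the one the request arrived on, using contact details your company already has on file rather than any supplied in the message. The Python sketch below encodes that rule; the `KNOWN_CONTACTS` directory, the channel names, and the example address are hypothetical placeholders for your own records.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class PaymentRequest:
    requester: str   # who the request claims to come from
    channel: str     # channel it arrived on, e.g. "email" or "video_call"
    amount: float


# Hypothetical internal directory: channels on which each executive's identity
# was verified in advance. Never build this from details inside the request.
KNOWN_CONTACTS: Dict[str, Set[str]] = {
    "ceo@example.com": {"email", "teams", "slack", "phone"},
}


def confirmation_channels(req: PaymentRequest) -> List[str]:
    """Channels you may use to confirm: known channels other than the incoming one."""
    known = KNOWN_CONTACTS.get(req.requester, set())
    return sorted(known - {req.channel})


def may_release_payment(req: PaymentRequest, confirmed_via: str) -> bool:
    """Release funds only after confirmation on an independent, pre-registered channel."""
    return confirmed_via in confirmation_channels(req)


if __name__ == "__main__":
    req = PaymentRequest("ceo@example.com", "email", 50_000.0)
    print(confirmation_channels(req))           # ['phone', 'slack', 'teams']
    print(may_release_payment(req, "email"))    # False: same channel as the request
    print(may_release_payment(req, "phone"))    # True
```

An unknown requester gets no valid confirmation channels at all, so the default outcome is "don't pay", which is the safe failure mode for this kind of check.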

3) Validate Claims

If someone from your team or customer base alerts you to a possible impersonation, examine all content submitted as evidence for signs that it was artificially generated or manipulated with software tools. Don't take any claim at face value, regardless of how convincing the supporting material looks.

This makes it easier to later train employees on how to spot fakes and to ask social platforms to take down the offending accounts. Building this verification muscle within your organization helps create a culture of healthy skepticism around digital content.

4) Verify Identities

Protect your company and accounts against attempts to bypass biometric authentication using synthetic media. As deepfake technology improves, traditional identity verification methods become more vulnerable to attacks.

For example, one thing social media platforms do when you lose access to your account is ask you to record a video of yourself turning your face from left to right and tilting it up and down. This multi-angle check helps distinguish real faces from synthetic media.

It works because deepfakes still struggle to render faces in profile (from the side) and to generate those details convincingly. This simple test can give them away, but deepfakes keep getting better, so this method may not work for much longer.
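
A check like this becomes harder to defeat with a pre-recorded or pre-rendered deepfake if the sequence of movements is randomized each time. The Python sketch below shows only that challenge-response logic, under stated assumptions: the movement names are hypothetical, and `observed` stands in for the output of a head-pose estimator, which is not shown.

```python
import secrets
from typing import List

# Hypothetical set of head movements the user may be asked to perform.
MOVEMENTS = ["turn_left", "turn_right", "look_up", "look_down"]


def issue_challenge(length: int = 3) -> List[str]:
    """Pick an unpredictable sequence of movements using a cryptographically secure RNG."""
    return [secrets.choice(MOVEMENTS) for _ in range(length)]


def passes_challenge(challenge: List[str], observed: List[str]) -> bool:
    """Pass only if the observed movements match the challenge exactly, in order."""
    return observed == challenge


if __name__ == "__main__":
    challenge = issue_challenge()
    print("Please perform:", ", ".join(challenge))
    # In a real flow, `observed` would come from a head-pose estimator (not shown).
    print(passes_challenge(["turn_left", "look_up"], ["turn_left", "look_up"]))  # True
    print(passes_challenge(["turn_left", "look_up"], ["look_up", "turn_left"]))  # False (wrong order)
```

Randomizing the order means an attacker can't simply replay a video that was generated in advance; they would need to produce convincing side views on demand.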

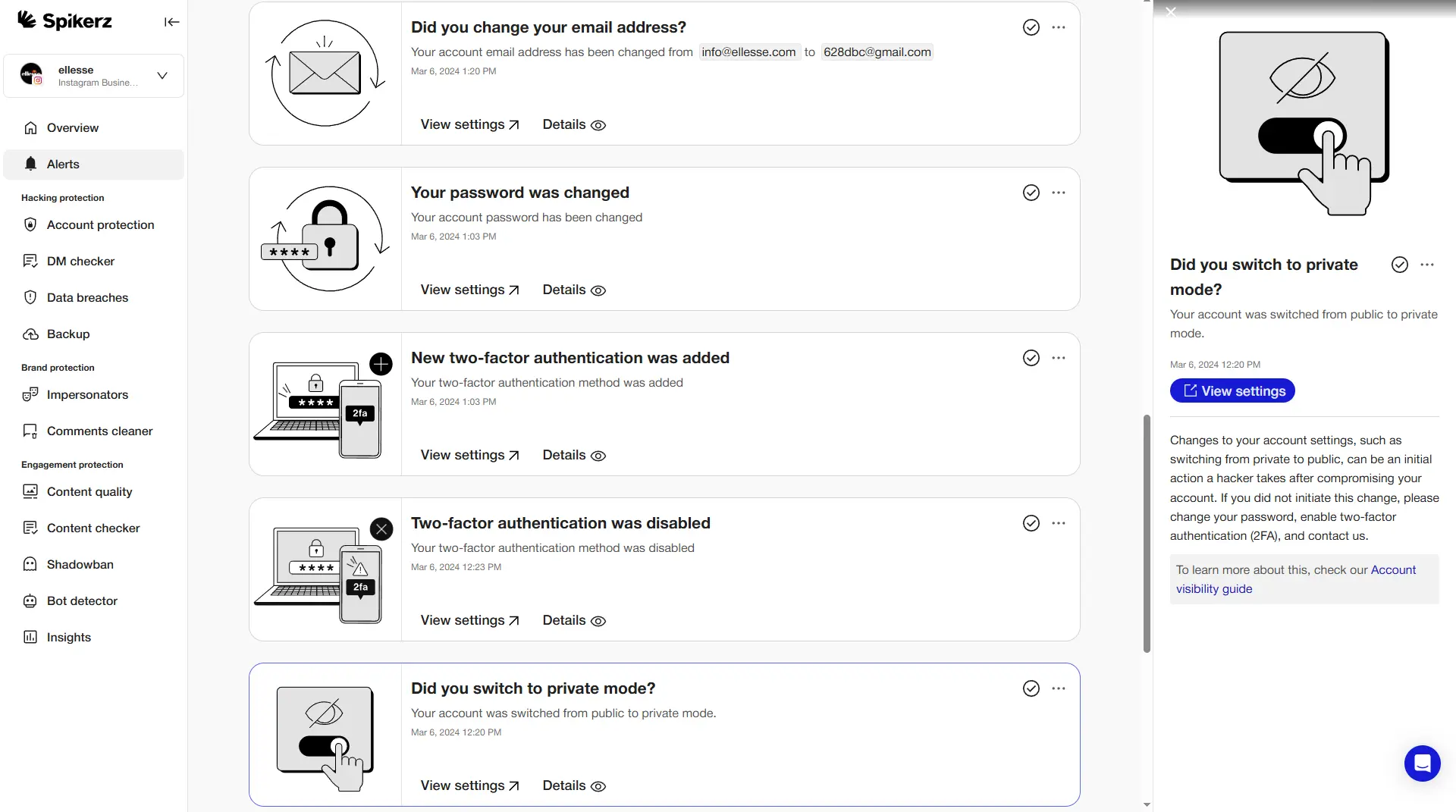

5) Account Takeover Protection

Businesses should do everything they can to prevent attackers from using malware and social engineering attacks to steal login credentials. Once attackers gain access to your accounts, they can use them to distribute deepfake content or launch other social engineering attacks from trusted sources.

Once attackers bypass authentication controls, they often take over accounts to sell them, hold them for ransom, or scam the business's audience. We see this all the time: this past month alone, the accounts of Xbox, The NY Post, and the UEFA Champions League were hacked to scam users into buying crypto meme coins. Imagine how much more effective these scams would be with deepfake videos of celebrities or executives endorsing them.

The good news is that there are multiple ways to protect your business from these attacks, such as antivirus software and social media security tools. Antivirus software protects local devices from malware; think of it as your first line of defense. If attackers breach this layer, social media security tools act as your second layer.

Social media security tools like Spikerz help you monitor your social accounts for account breaches, phishing messages, bot attacks, and more. When these tools detect a successful breach, they automatically kick out the intruder, change your password, and alert you to the issue.
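
The detect-and-respond loop described above can be sketched in a few lines. This is a hypothetical illustration, not Spikerz's implementation or API: it flags a login from a device and country pair not previously seen for that account and returns the response steps the paragraph lists (remove the intruder, change the password, alert you). Real tools weigh many more signals.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class LoginEvent:
    account: str
    device_id: str
    country: str


# Hypothetical record of device/country pairs previously seen for each account.
KNOWN_LOGINS: Dict[str, Set[Tuple[str, str]]] = {
    "brand_instagram": {("laptop-01", "US"), ("phone-02", "US")},
}


def respond_to_login(event: LoginEvent) -> List[str]:
    """Return the response actions for a login; an empty list means nothing suspicious."""
    seen = KNOWN_LOGINS.get(event.account, set())
    if (event.device_id, event.country) in seen:
        return []
    # Unrecognized device or location: remove the intruder, rotate credentials, tell a human.
    return ["revoke_all_sessions", "reset_password", "alert_security_team"]


if __name__ == "__main__":
    print(respond_to_login(LoginEvent("brand_instagram", "laptop-01", "US")))
    print(respond_to_login(LoginEvent("brand_instagram", "unknown-99", "RO")))
```

Note that a known device appearing from a new country is also flagged; this errs on the side of caution at the cost of occasional false alarms when staff travel.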

6) Brand Impersonation

One of the big use cases for deepfakes is impersonating C-level executives and brands. The technology allows attackers to create convincing video and audio content that appears to come from trusted sources, making their impersonation attempts far more credible.

For example, in early 2024, a finance employee in Hong Kong was deceived into wiring $25 million to criminals after participating in a deepfake video conference with executives who looked real but were entirely synthetic.

That's why you should monitor social media for impersonators that may be misusing your brand assets or using deepfakes of your company's leadership to try to trick your employees or customers.

As soon as you find impersonators, report them to stop the spread of false information and protect your brand's credibility. The sooner you report them, the faster social media platforms can take them down.
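
Part of this monitoring can be automated by comparing new account handles against your official one. The Python sketch below uses the standard library's `difflib.SequenceMatcher` plus a simple character-substitution step to catch lookalikes such as a zero in place of an "o". The brand handle, the substitution table, and the 0.8 similarity threshold are hypothetical choices you would tune for your own brand.

```python
from difflib import SequenceMatcher
from typing import List

OFFICIAL_HANDLE = "acmecorp"  # hypothetical brand handle

# Common character swaps used in lookalike handles (illustrative, not exhaustive).
SUBSTITUTIONS = str.maketrans({"0": "o", "1": "i", "3": "e", "5": "s", "@": "a"})


def normalize(handle: str) -> str:
    """Lowercase, undo common lookalike substitutions, and drop separators."""
    return handle.lower().translate(SUBSTITUTIONS).replace("_", "").replace(".", "")


def is_suspicious(handle: str, threshold: float = 0.8) -> bool:
    """Flag handles that contain, or closely resemble, the official handle."""
    if handle == OFFICIAL_HANDLE:
        return False  # the real account
    candidate = normalize(handle)
    if OFFICIAL_HANDLE in candidate:
        return True
    return SequenceMatcher(None, OFFICIAL_HANDLE, candidate).ratio() >= threshold


def scan(handles: List[str]) -> List[str]:
    """Return the handles worth reviewing and reporting to the platform."""
    return [h for h in handles if is_suspicious(h)]


if __name__ == "__main__":
    found = ["acmecorp", "acmec0rp", "acme_corp_support", "acrnecorp", "gardenclub"]
    print(scan(found))  # ['acmec0rp', 'acme_corp_support', 'acrnecorp']
```

The substitution table catches "acmec0rp", the containment check catches "acme_corp_support", and the similarity ratio catches "acrnecorp" (where "rn" imitates "m"). Anything flagged still needs a human review before you report it.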

7) Phishing Monitoring

Phishing is the most common attack vector criminals use to initiate contact with potential victims, and deepfake technology is making these attacks more sophisticated and harder to detect.

What's particularly concerning is that according to research from Deloitte, 25.9% of executives say their organizations have experienced one or more deepfake incidents. And a study cited in CFO Magazine reports even higher numbers, stating that 92% of companies have experienced financial loss due to a deepfake.

So make sure a monitoring tool is analyzing your email, social media messages, and comments to help you avoid falling victim to these scams. Phishing attacks enhanced with deepfake technology represent a new level of threat that requires specialized detection and response capabilities.
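
Commercial monitoring tools typically combine many more signals than simple rules, but the basic idea of scoring messages for red flags can be sketched briefly. The Python sketch below is a hypothetical, rule-based example: the keyword lists, the `example.com` company domain, and the flagging threshold of 3 are assumptions for illustration only.

```python
from typing import Tuple

COMPANY_DOMAIN = "example.com"  # hypothetical: your organization's email domain

# Illustrative red-flag phrases common in payment and impersonation scams.
URGENCY_PHRASES: Tuple[str, ...] = ("urgent", "immediately", "confidential", "today")
PAYMENT_PHRASES: Tuple[str, ...] = ("wire", "transfer", "gift card", "bank details", "invoice")


def phishing_score(sender_domain: str, body: str) -> int:
    """Count red flags: external sender plus each urgency or payment phrase present."""
    text = body.lower()
    score = 0
    if sender_domain.lower() != COMPANY_DOMAIN:
        score += 1  # includes lookalike domains such as "examp1e.com"
    score += sum(1 for phrase in URGENCY_PHRASES if phrase in text)
    score += sum(1 for phrase in PAYMENT_PHRASES if phrase in text)
    return score


def needs_review(sender_domain: str, body: str, threshold: int = 3) -> bool:
    """Route the message to a human (and out-of-band verification) at or above the threshold."""
    return phishing_score(sender_domain, body) >= threshold


if __name__ == "__main__":
    scam = "URGENT: wire the funds today and keep this confidential."
    print(phishing_score("examp1e.com", scam))                  # 5
    print(needs_review("examp1e.com", scam))                    # True
    print(needs_review("example.com", "Lunch at noon today?"))  # False
```

Plain substring matching is crude (for example, "wire" also matches "wireless"), which is one reason real tools rely on trained models and sender reputation rather than keyword lists alone.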

Conclusion

Deepfakes represent a fundamental shift in the cybersecurity landscape, transforming how attackers impersonate executives, manipulate employees, and deceive customers. With one study reporting that 92% of companies have already experienced financial losses from deepfakes, this isn't a future threat; it's happening right now.

The technology's rapid evolution means that today's detection methods may become obsolete tomorrow. That's why your defense strategy must focus on processes and verification procedures rather than relying solely on your ability to spot synthetic content. That said, if you implement multiple layers of protection (from securing collaboration tools to monitoring for brand impersonation) you’ll create a robust defense against these attacks.

Your business has spent years building trust with customers, employees, and partners. Don't let deepfake attackers destroy that trust in a matter of minutes. Start protecting your digital presence today with social media security tools that monitor for impersonation, prevent account takeovers, and help you respond quickly when threats emerge. The cost of prevention is always less than the price of recovery.