Hate Speech in 2026 And How AI Moderation Can Help

The internet has given everyone a platform to speak their mind. But somewhere along the way, many people started believing that having a voice means saying whatever they want to whoever they want, without any consequences. You’ve probably seen it yourself: comment sections filled with slurs, threats, and hate directed at people simply because of who they are.

This shift didn’t happen overnight. Years of unchecked behavior online have created an environment where hate speech feels normal to some users. But just because it has become common doesn’t mean your brand or business should accept it.

In this post, we’ll go over what hate speech really is, examine how widespread this problem has become, and discuss how social media platforms have made things worse. Most importantly, we’ll show you what your business can do to protect your community (even when governments aren’t stepping in to help).

What Is Hate Speech?

Hate speech refers to any communication that attacks or demeans a person or group based on attributes like race, religion, ethnicity, gender, sexual orientation, or disability. Online, this takes many forms: direct attacks in comment sections, coordinated harassment campaigns, dehumanizing language in posts, and even coded messages that slip past basic moderation systems.

The biggest issue with hate speech is that its consequences go far beyond hurt feelings. It’s been linked to a global increase in violence toward minorities, including mass shootings, lynchings, and ethnic cleansing. When hateful rhetoric spreads unchecked on social platforms, it creates an environment where real-world violence becomes more likely and more accepted.

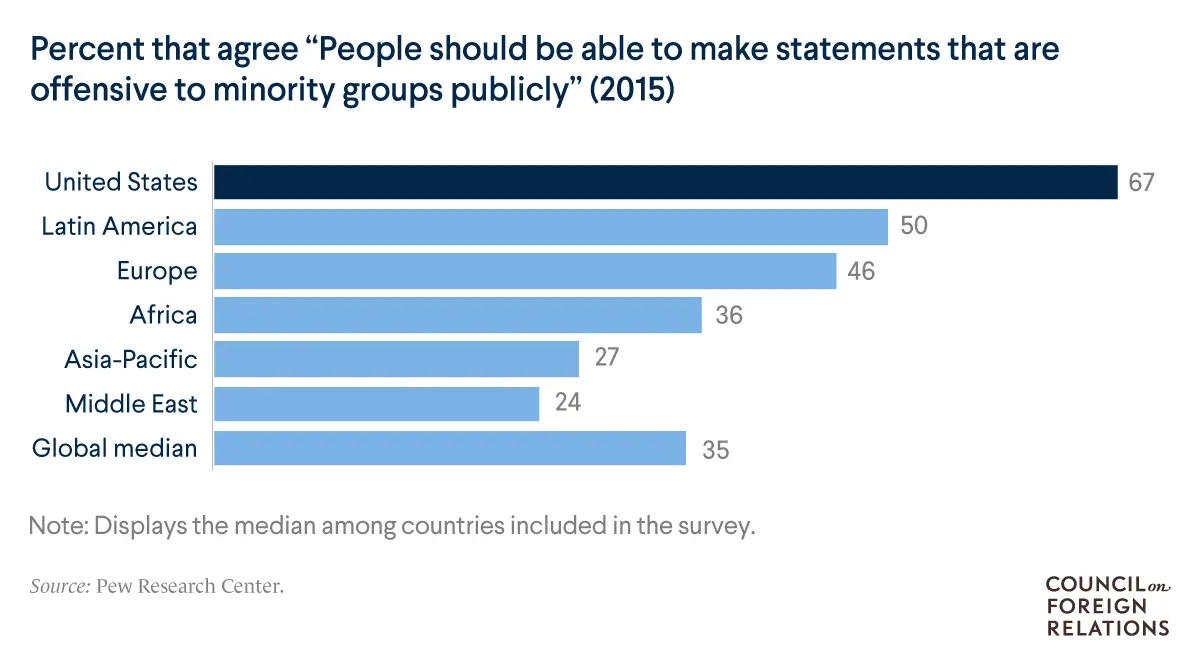

The challenge is that policies meant to curb hate speech risk limiting free speech, and even then they are inconsistently enforced. Countries like the United States grant social media companies broad powers in managing their content and enforcing hate speech rules. Others, including Germany, can force companies to remove posts within certain time periods. This patchwork approach means businesses operating across multiple countries face different standards and enforcement mechanisms. So what can you do about it?

How Widespread Is The Problem?

Hate speech isn’t isolated to one region or platform. Incidents have been reported on nearly every continent, affecting communities across cultural and geographic boundaries. What starts as online harassment often escalates into real harm. For example:

Online extremist narratives have been linked to abhorrent real-world events, including hate crimes. In Germany, researchers found a correlation between anti-refugee Facebook posts by the far-right Alternative for Germany party and attacks on refugees. Scholars Karsten Müller and Carlo Schwarz observed that upticks in attacks, such as arson and assault, followed spikes in hate-mongering posts.

The United States has seen similar patterns. Perpetrators of recent white supremacist attacks have circulated among racist communities online and embraced social media to publicize their acts. Prosecutors said the Charleston church shooter, who killed nine black clergy and worshippers in June 2015, engaged in a “self-learning process” online that led him to believe that the goal of white supremacy required violent action.

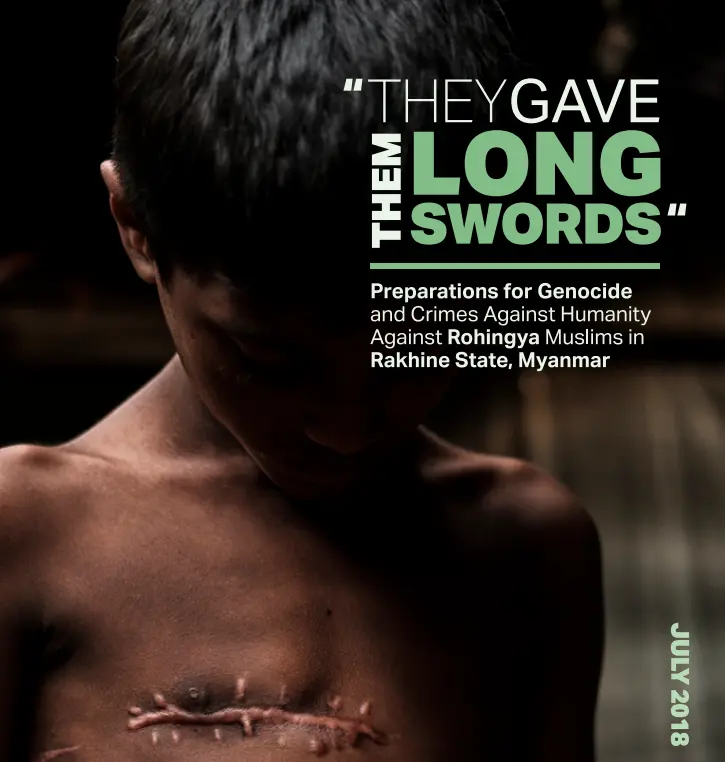

Another clear example is Myanmar, where military leaders and Buddhist nationalists used social media to slur and demonize the Rohingya Muslim minority ahead of and during a campaign of ethnic cleansing. Though Rohingya comprised perhaps 2 percent of the population, ethnonationalists claimed that Rohingya would soon supplant the Buddhist majority. The UN fact-finding mission said, “Facebook has been a useful instrument for those seeking to spread hate, in a context where, for most users, Facebook is the Internet.”

How Has Social Media Exacerbated The Problem?

Social media promised to connect the world and give everyone a voice. Instead, it created echo chambers where hate can spread faster than ever before. Cyberspace offers freedom of communication and expression, but the current social media landscape is regularly misused to spread violent messages, comments, and hateful speech.

In the European Union, 80% of people have encountered hate speech online and 40% have felt attacked or threatened via social network sites. These aren’t isolated incidents; they represent a systemic problem affecting millions of users daily.

A 2021 study by Sergio Andrés Castaño-Pulgarín, Natalia Suárez-Betancur, and colleagues documented serious consequences of online hate speech. Chief among them, the researchers identified harm to social groups: hate speech creates an environment of prejudice and intolerance, fosters discrimination and hostility, and in severe cases facilitates violent acts.

The study also documented impoliteness, pejorative terms, vulgarity, and sarcasm as common manifestations, along with incivility that includes behaviors threatening democracy, denying people their personal freedoms, or stereotyping social groups.

What’s worse is that the research showed that online hate speech often translates into offline violence. Direct aggressions have been reported against political ideologies, religious groups, and ethnic minorities. For example, racial and ethnic-centered rumors can lead to ethnic violence, and offended individuals might be threatened because of their group identities.

What Can Brands And Businesses Do About This?

You might think hate speech moderation is someone else’s problem (something for governments or platform owners to handle). But your business has both the power and the responsibility to create safe spaces for your community. Even if governments don’t have laws that protect groups against hate speech, you can still build environments where your customers feel safe enough to engage.

Your brand’s comment sections, direct messages, and community spaces reflect who you are and what you stand for. When you allow hate speech to flourish, you’re telling vulnerable community members they’re not welcome. And when you actively moderate and remove harmful content, you’re showing everyone that your brand values safety and respect.

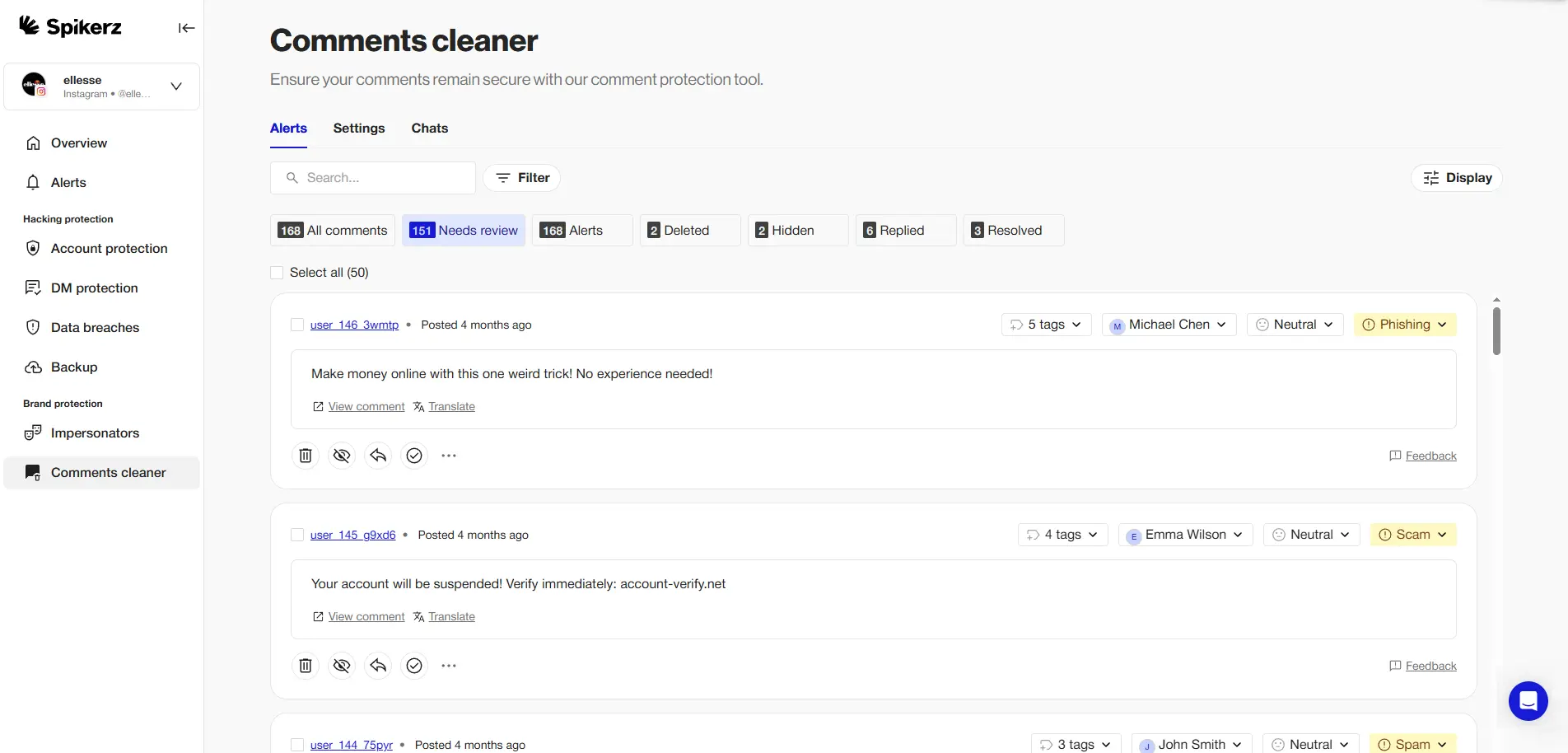

The good news is that tools like Spikerz make comment moderation easier than ever before. While basic keyword filters can usually be bypassed with creative spelling or coded language, Spikerz uses AI-powered comment management to understand context and intent. For example, the system identifies hate speech even when it doesn’t contain explicit slurs, catching the subtle attacks that slip through traditional filters.
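
To see why basic keyword filters are so easy to get around, here is a minimal Python sketch. It is only an illustration, not how Spikerz or any other product works: the blocklist, the placeholder term "badword", the substitution table, and both function names are assumptions made up for this example.

```python
import re

# Hypothetical blocklist with a harmless placeholder term.
BLOCKLIST = {"badword"}

# Assumed character swaps people use to dodge filters (illustrative, not exhaustive).
SUBSTITUTIONS = str.maketrans(
    {"4": "a", "@": "a", "0": "o", "1": "i", "3": "e", "$": "s", "5": "s"}
)


def naive_filter(comment: str) -> bool:
    """Flag a comment only if a blocklisted word appears verbatim."""
    words = re.findall(r"[a-z]+", comment.lower())
    return any(word in BLOCKLIST for word in words)


def normalized_filter(comment: str) -> bool:
    """Flag a comment after undoing a few simple spelling tricks."""
    text = comment.lower().translate(SUBSTITUTIONS)
    # Drop separators inserted between letters, e.g. "b.a.d.w.o.r.d".
    text = re.sub(r"[^a-z\s]", "", text)
    # Collapse characters repeated three or more times, e.g. "baaadword".
    text = re.sub(r"(.)\1{2,}", r"\1", text)
    return any(word in BLOCKLIST for word in text.split())


if __name__ == "__main__":
    for sample in ["you are a badword", "you are a b@dw0rd", "have a nice day"]:
        print(sample, naive_filter(sample), normalized_filter(sample))
```

The naive filter misses "b@dw0rd" while the normalized one catches it. Neither can flag a hateful comment that contains no blocklisted term at all, which is the gap that context-aware moderation is meant to close.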

Its AI analyzes user behavior patterns to identify repeat offenders, bot networks spreading hate, and coordinated harassment campaigns targeting your followers. You can also customize filters based on your specific community needs, removing content that violates your standards before it causes harm.

At the end of the day, brands and businesses need to take care of their communities so they feel safe enough to engage with other people, your products, and your services. A toxic environment drives away customers and damages your reputation. But if you invest in proper moderation, you won’t just be protecting people from hate speech; you’ll also be protecting the community you’ve worked hard to build.

Conclusion

Hate speech in 2025 is one of the most serious threats to online communities and real-world safety. Unfortunately, what happens online doesn’t always stay online. From attacks on refugees in Germany to mass shootings in American synagogues and churches, hate speech has direct and devastating consequences for vulnerable communities.

What’s worse is that social media platforms have amplified this problem, creating spaces where hate can spread faster than ever before. It’s a systemic problem that demands urgent action.

Your business can’t afford to wait for governments or platforms to solve this problem. You need to take action now to protect your community.

The good news is that tools exist to moderate hate speech effectively without constant manual oversight. For example, AI-powered solutions like Spikerz understand context, catch subtle attacks, and work continuously to keep your spaces safe.

When you create an environment where people feel protected and respected, they’ll reward you with loyalty, engagement, and trust. That’s not just good ethics, it’s good business.